Apache Hive

Apache Hive is a fault-tolerant distributed data warehouse system built on top of Hadoop. It uses a SQL-like language called HiveQL for reading, writing, and analyzing large datasets. Hive supports Online Analytical Processing (OLAP) and was not designed for Online Transaction Processing (OLTP).

Hive enables developers and users to use SQL-like syntax and features for extract, transform, load (ETL) jobs, reporting, and data analytics. Data can then be stored in various formats in various Hadoop databases. HiveQL queries are translated into the format required by the underlying database system. Hive provides standard operations such as filters, joins, and aggregations.

Unlike relational databases, Hive does not use the schema-on-write (SoW) approach, but uses the schema-on-read (SoR) approach.

Data is always stored as is in Hadoop and is only checked against a specific schema when it is read. This makes loading data significantly faster. Also, different schemas can be applied to the same data.
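The schema-on-read idea can be illustrated with a minimal Python sketch. It is not Hive code: the `RAW_FILE` content, the `read_with_schema` helper, and the two schemas are hypothetical names introduced for illustration. The raw text is stored untouched, and a schema is only applied when the data is read, so two different schemas can be projected onto the same stored data. Returning `None` for a value that does not fit the declared type is an assumption of this sketch, meant to mimic a mismatched value surfacing as NULL at query time.

```python
import csv
import io

# Raw data is kept exactly as it arrived, as a file would be in Hadoop.
# Nothing is validated at write time (hypothetical sample content).
RAW_FILE = "1,alice,42.5\n2,bob,not_a_number\n3,carol,17\n"


def read_with_schema(raw, schema):
    """Apply a schema, given as (column name, caster) pairs, only at read time."""
    rows = []
    for record in csv.reader(io.StringIO(raw)):
        row = {}
        for (name, cast), value in zip(schema, record):
            try:
                row[name] = cast(value)
            except ValueError:
                # Assumption of this sketch: a value that does not match
                # the declared type is read as None instead of failing.
                row[name] = None
        rows.append(row)
    return rows


# Two different schemas applied to the same stored data.
typed_schema = [("id", int), ("name", str), ("amount", float)]
text_schema = [("id", str), ("name", str), ("amount", str)]

typed_rows = read_with_schema(RAW_FILE, typed_schema)
text_rows = read_with_schema(RAW_FILE, text_schema)

for row in typed_rows:
    print(row)
```

Because the malformed second record is only detected when the typed schema is applied, loading it costs nothing up front, while the text schema still exposes the original value unchanged.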

- Learn more

- Official website

Related articles

CDP part 6: end-to-end data lakehouse ingestion pipeline with CDP

Categories: Big Data, Data Engineering, Learning | Tags: NiFi, Business intelligence, Data Engineering, Iceberg, Spark, Big Data, Cloudera, CDP, Data Analytics, Data Lake, Data Warehouse

In this hands-on lab session we demonstrate how to build an end-to-end big data solution with Cloudera Data Platform (CDP) Public Cloud, using the infrastructure we have deployed and configured over…

Jul 24, 2023

Comparison of database architectures: data warehouse, data lake and data lakehouse

Categories: Big Data, Data Engineering | Tags: Data Governance, Infrastructure, Iceberg, Parquet, Spark, Data Lake, Data lakehouse, Data Warehouse, File Format

Database architectures have experienced constant innovation, evolving with the appearance of new use cases, technical constraints, and requirements. From the three database structures we are comparing…

By Gonzalo ETSE

May 17, 2022

Internship in Big Data infrastructure with TDP

Categories: Infrastructure, Learning | Tags: Cyber Security, DevOps, Java, Hadoop, IaC, Internship, TDP

Job Description Big Data and distributed computing are at Adaltas’ core. We support our partners in the deployment, maintenance and optimization of some of France’s largest clusters. Adaltas is also an…

By Daniel HARTY

Oct 25, 2021

H2O in practice: a protocol combining AutoML with traditional modeling approaches

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python, XGBoost

H2O comes with a lot of functionalities. The second part of the series H2O in practice proposes a protocol to combine AutoML modeling with traditional modeling and optimization approaches. The objective…

Nov 12, 2021

Internship in Data Engineering

Categories: Front End, Learning | Tags: Metrics, Monitoring, Hive, Kafka, Delta Lake, Elasticsearch, IaC, Internship, Kubernetes, Streaming

Job Description Data is a valuable business asset. Some call it the new oil. The data engineer collects, transforms and refines raw data into information that can be used by business analysts and data…

By David WORMS

Oct 25, 2021

H2O in practice: a Data Scientist feedback

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python

Automated machine learning (AutoML) platforms are gaining popularity and becoming a new important tool in the data scientists’ toolbox. A few months ago, I introduced H2O, an open-source platform for…

Sep 29, 2021

Storage size and generation time in popular file formats

Categories: Data Engineering, Data Science | Tags: Avro, HDFS, Hive, ORC, Parquet, Big Data, Data Lake, File Format, JavaScript Object Notation (JSON)

Choosing an appropriate file format is essential, whether your data transits on the wire or is stored at rest. Each file format comes with its own advantages and disadvantages. We covered them in a…

Mar 22, 2021

Build your open source Big Data distribution with Hadoop, HBase, Spark, Hive & Zeppelin

Categories: Big Data, Infrastructure | Tags: Maven, Hadoop, HBase, Hive, Spark, Git, Release and features, TDP, Unit tests

The Hadoop ecosystem gave birth to many popular projects including HBase, Spark and Hive. While technologies like Kubernetes and S3 compatible object storages are growing in popularity, HDFS and YARN…

Dec 18, 2020

Faster model development with H2O AutoML and Flow

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python

Building Machine Learning (ML) models is a time-consuming process. It requires expertise in statistics, ML algorithms, and programming. On top of that, it also requires the ability to translate a…

Dec 10, 2020

Rebuilding HDP Hive: patch, test and build

Categories: Big Data, Infrastructure | Tags: Maven, Java, Hive, Git, GitHub, Release and features, TDP, Unit tests

The Hortonworks HDP distribution will soon be deprecated in favor of Cloudera’s CDP. One of our clients wanted a new Apache Hive feature backported into HDP 2.6.0. We thought it was a good opportunity…

Oct 6, 2020

Download datasets into HDFS and Hive

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data Engineering, Data structures, Database, Hadoop, HDFS, Hive, Big Data, Data Analytics, Data Lake, Data lakehouse, Data Warehouse

Introduction Nowadays, the analysis of large amounts of data is becoming more and more possible thanks to Big data technology (Hadoop, Spark,…). This explains the explosion of the data volume and the…

By Aida NGOM

Jul 31, 2020

Comparison of different file formats in Big Data

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data structures, Avro, HDFS, ORC, Parquet, Batch processing, Big Data, CSV, JavaScript Object Notation (JSON), Kubernetes, Protocol Buffers

In data processing, there are different types of file formats to store your data sets. Each format has its own pros and cons depending upon the use cases and exists to serve one or several purposes…

By Aida NGOM

Jul 23, 2020

Introducing Apache Airflow on AWS

Categories: Big Data, Cloud Computing, Containers Orchestration | Tags: PySpark, Learning and tutorial, Airflow, Oozie, Spark, AWS, Docker, Python

Apache Airflow offers a potential solution to the growing challenge of managing an increasingly complex landscape of data management tools, scripts and analytics processes. It is an open-source…

May 5, 2020

Hadoop Ozone part 1: an introduction of the new filesystem

Categories: Infrastructure | Tags: HDFS, Ozone, Cluster, Kubernetes

Hadoop Ozone is an object store for Hadoop. It is designed to scale to billions of objects of varying sizes. It is currently in development. The roadmap is available on the project wiki. This article…

Dec 3, 2019

Running Apache Hive 3, new features and tips and tricks

Categories: Big Data, Business Intelligence, DataWorks Summit 2019 | Tags: JDBC, LLAP, Druid, Hadoop, Hive, Kafka, Release and features

Apache Hive 3 brings a bunch of new and nice features to the data warehouse. Unfortunately, like many major FOSS releases, it comes with a few bugs and not much documentation. It is available since…

Jul 25, 2019

Druid and Hive integration

Categories: Big Data, Business Intelligence, Tech Radar | Tags: LLAP, OLAP, Druid, Hive, Data Analytics, SQL

This article covers the integration between Hive Interactive (LLAP) and Druid. One can see it as a complement of the Ultra-fast OLAP Analytics with Apache Hive and Druid article. Tools description…

Jun 17, 2019

Publish Spark SQL DataFrame and RDD with Spark Thrift Server

Categories: Data Engineering | Tags: Thrift, JDBC, Hadoop, Hive, Spark, SQL

The distributed and in-memory nature of the Spark engine makes it an excellent candidate to expose data to clients which expect low latencies. Dashboards, notebooks, BI studios, KPIs-based reports…

Mar 25, 2019

Apache Knox made easy!

Categories: Big Data, Cyber Security, Adaltas Summit 2018 | Tags: Kerberos, LDAP, Active Directory, Knox, Ranger, REST

Apache Knox is the secure entry point of a Hadoop cluster, but can it also be the entry point for my REST applications? Apache Knox overview Apache Knox is an application gateway for interacting in a…

Feb 4, 2019

Data Lake ingestion best practices

Categories: Big Data, Data Engineering | Tags: NiFi, Data Governance, HDF, Operation, Avro, Hive, ORC, Spark, Data Lake, File Format, Protocol Buffers, Registry, Schema

Creating a Data Lake requires rigor and experience. Here are some good practices around data ingestion both for batch and stream architectures that we recommend and implement with our customers…

By David WORMS

Jun 18, 2018

Accelerating query processing with materialized views in Apache Hive

Categories: Business Intelligence, DataWorks Summit 2018 | Tags: Calcite, OLAP, Druid, Hive, Release and features, SQL

The new materialized view feature is coming in Apache Hive 3.0. Jesus Camacho Rodriguez from Hortonworks held a talk “Accelerating query processing with materialized views in Apache Hive” about it…

May 31, 2018

Present and future of Hadoop workflow scheduling: Oozie 5.x

Categories: Big Data, DataWorks Summit 2018 | Tags: Hadoop, Hive, Oozie, Sqoop, CDH, HDP, REST

During the DataWorks Summit Europe 2018 in Berlin, I had the opportunity to attend a breakout session on Apache Oozie. It covers the new features released in Oozie 5.0, including future features of…

May 23, 2018

Essential questions about Time Series

Categories: Big Data | Tags: Grafana, Druid, HBase, Hive, ORC, Data Science, Elasticsearch, IOT

Today, the bulk of Big Data is temporal. We see it in the media and among our customers: smart meters, banking transactions, smart factories, connected vehicles … IoT and Big Data go hand in hand. We…

By David WORMS

Mar 18, 2018

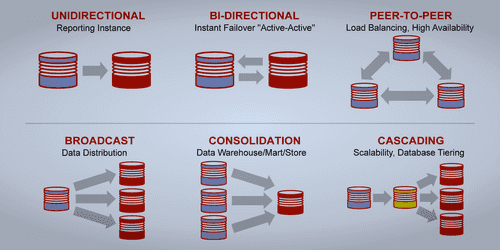

MariaDB integration with Hadoop

Categories: Infrastructure | Tags: Database, HA, MariaDB, Hadoop, Hive

During a workshop with one of our customers, Adaltas identified a potential risk in using MariaDB’s High Availability (HA) strategy. Since the customer selected Cloudera’s CDH 5 distribution, the…

By David WORMS

Jul 31, 2017

Oracle DB synchronization to Hadoop with CDC

Categories: Data Engineering | Tags: CDC, GoldenGate, Oracle, Hive, Sqoop, Data Warehouse

This note is the result of a discussion about the synchronization of data written in a database to a warehouse stored in Hadoop. Thanks to Claude Daub from GFI who wrote it and who authorizes us to…

By David WORMS

Jul 13, 2017

Hive Metastore HA with DBTokenStore: Failed to initialize master key

Categories: Big Data, DevOps & SRE | Tags: Infrastructure, Hive, Bug

This article describes my little adventure around a startup error with the Hive Metastore. It shall be reproducible with any secure installation, meaning with Kerberos, with high availability enabled…

By David WORMS

Jul 21, 2016

Hive, Calcite and Druid

Categories: Big Data | Tags: Business intelligence, Database, Druid, Hadoop, Hive

BI/OLAP requires interactive visualization of complex data streams: real time bidding events, user activity streams, voice call logs, network traffic flows, firewall events, application KPIs. Traditional…

By David WORMS

Jul 14, 2016

Components for CDH and HDP

Categories: Big Data | Tags: Flume, Hortonworks, Hadoop, Hive, Oozie, Sqoop, Zookeeper, Cloudera, CDH, HDP

I was interested in comparing the different components distributed by Cloudera and Hortonworks. This also gives us an idea of the versions packaged by the two distributions. At the time of this writing…

By David WORMS

Sep 22, 2013

Splitting HDFS files into multiple hive tables

Categories: Data Engineering | Tags: Flume, Pig, HDFS, Hive, Oozie, SQL

I am going to show how to split a CSV file stored inside HDFS as multiple Hive tables based on the content of each record. The context is simple. We are using Flume to collect logs from all over our…

By David WORMS

Sep 15, 2013

Oracle and Hive, how data are published?

Categories: Big Data | Tags: Oracle, Hive, Sqoop, Data Lake

In the past few days, I’ve published 3 related articles: a first one covering the option to integrate Oracle and Hadoop, a second one explaining how to install and use the Oracle SQL Connector with…

By David WORMS

Jul 6, 2013

Options to connect and integrate Hadoop with Oracle

Categories: Data Engineering | Tags: Database, Java, Oracle, R, RDBMS, Avro, HDFS, Hive, MapReduce, Sqoop, NoSQL, SQL

I will list the different tools and libraries available to us developers in order to integrate Oracle and Hadoop. The Oracle SQL Connector for HDFS described below is covered in a follow up article…

By David WORMS

May 15, 2013

Oracle to Apache Hive with the Oracle SQL Connector

Categories: Business Intelligence | Tags: Oracle, HDFS, Hive, Network

In a previous article published last week, I introduced the choices available to connect Oracle and Hadoop. In a follow up article, I covered the Oracle SQL Connector, its installation and integration…

By David WORMS

May 27, 2013

Apache Hive Essentials How-to by Darren Lee

Categories: Business Intelligence, Learning | Tags: UDF, Hadoop, Hive, File Format, SQL

Recently, I’ve been asked to review a new book on Apache Hive called “Apache Hive Essentials How-to” (edit: the second edition is now available) written by Darren Lee and published by Packt Publishing…

By David WORMS

Apr 23, 2013

Two Hive UDAF to convert an aggregation to a map

Categories: Data Engineering | Tags: Java, HBase, Hive, File Format

I am publishing two new Hive UDAFs to help with maps in Apache Hive. The source code is available on GitHub in two Java classes: “UDAFToMap” and “UDAFToOrderedMap” or you can download the jar file. The…

By David WORMS

Mar 6, 2012

HDFS and Hive storage - comparing file formats and compression methods

Categories: Big Data | Tags: Business intelligence, Hive, ORC, Parquet, File Format

A few days ago, we conducted a test in order to compare various Hive file formats and compression methods. Among those file formats, some are native to HDFS and apply to all Hadoop users. The…

By David WORMS

Mar 13, 2012

Timeseries storage in Hadoop and Hive

Categories: Data Engineering | Tags: CRM, timeseries, Tuning, Hadoop, HDFS, Hive, File Format

In the next few weeks, we will be exploring the storage and analysis of a large generated dataset. This dataset is composed of CRM tables associated with one time series table of about 7,000 billion rows…

By David WORMS

Jan 10, 2012