Apache Knox made easy!

Feb 4, 2019

- Categories

- Big Data

- Cyber Security

- Adaltas Summit 2018

- Tags

- LDAP

- Active Directory

- Knox

- Ranger

- Kerberos

- REST


Apache Knox is the secure entry point of a Hadoop cluster, but can it also be the entry point for my REST applications?

Apache Knox overview

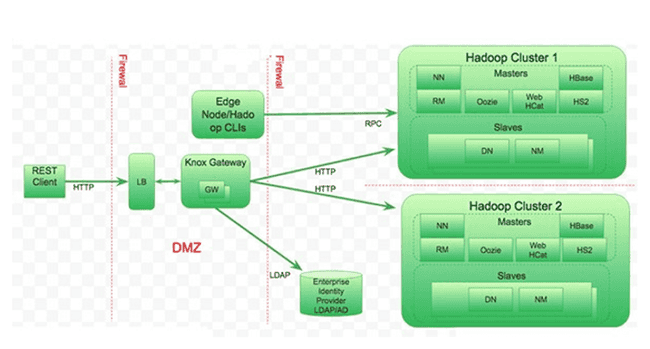

Apache Knox is an application gateway for securely interacting with the REST APIs and user interfaces of one or more Hadoop clusters. Out of the box, it provides:

- Support for the most common services (WebHDFS, Apache Oozie, Apache Hive/JDBC, etc.) and user interfaces (Apache Ambari, Apache Ranger, etc.) of a Hadoop cluster;

- Strong integration with enterprise authentication systems (Microsoft Active Directory, LDAP, Kerberos, etc.);

- And several other useful features.

On the other hand, it is neither an alternative to Kerberos for the strong authentication of a Hadoop cluster, nor a channel for ingesting or exporting large volumes of data.

The benefits of the gateway fall into four categories:

- Enhanced security: REST and HTTP services are exposed without revealing the details of the Hadoop cluster, web application vulnerabilities are filtered, and SSL is provided for services that do not support it natively;

- Centralized control through a single gateway, which simplifies auditing and authorization (with Apache Ranger);

- Simplified access: Kerberos is encapsulated behind the gateway, and clients only need a single SSL certificate;

- Enterprise integration with leading market solutions (Microsoft Active Directory, LDAP, Kerberos, etc.) or custom solutions (Apache Shiro, etc.).

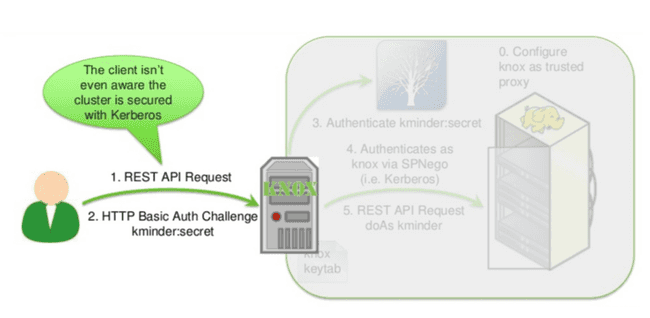

Kerberos encapsulation

Encapsulation is mainly used for products that are not compatible with the Kerberos protocol. The user provides a username and password through HTTP Basic authentication, and Apache Knox handles the Kerberos authentication with the cluster services.

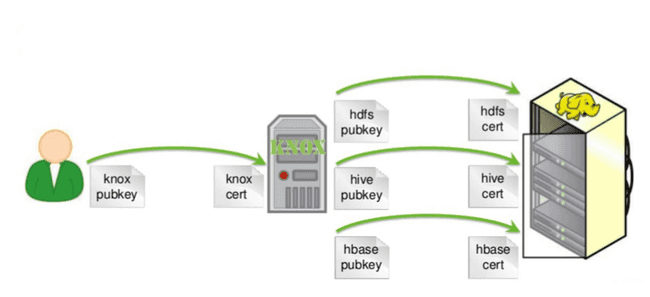

Simplified management of client certificates

Clients only need to trust the Apache Knox certificate: the certificates of the different services are centralized on the Apache Knox servers instead of being distributed to every client. This is very useful when a certificate must be revoked and replaced.

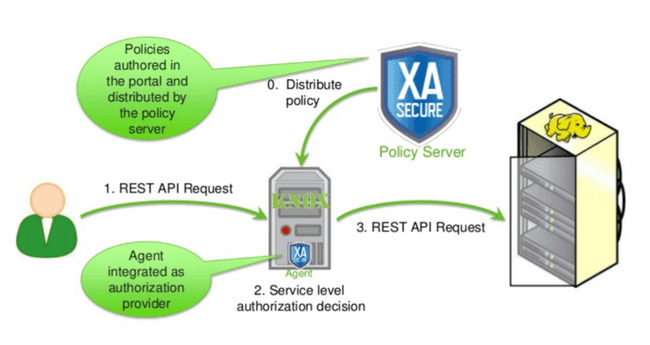

Apache Ranger integration

Apache Knox includes an Apache Ranger agent to check the permissions of users who want to access cluster resources.

Hadoop URLs VS Knox URLs

Using Apache Knox URLs obscures the cluster architecture and allows users to remember only one URL.

| Service | Hadoop URL | Apache Knox URL |
|---|---|---|
| WebHDFS | http://namenode-host:50070/webhdfs | https://knox-host:8443/gateway/default/webhdfs |
| WebHCat | http://webhcat-host:50111/templeton | https://knox-host:8443/gateway/default/templeton |
| Apache Oozie | http://oozie-host:11000/oozie | https://knox-host:8443/gateway/default/oozie |
| Apache HBase | http://hbase-host:60080 | https://knox-host:8443/gateway/default/hbase |
| Apache Hive | http://hive-host:10001/cliservice | https://knox-host:8443/gateway/default/hive |
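The Knox column of the table follows a regular pattern: the Knox host and port, the fixed `gateway` path, the topology name, then the service path. The Python sketch below builds such URLs; the helper name `knox_url` is hypothetical and the default port 8443 is simply the one used in the table, so adapt both to your deployment.

```python
def knox_url(knox_host: str, topology: str, service_path: str, port: int = 8443) -> str:
    """Return the Knox URL of a service exposed through a topology.

    Pattern taken from the table: https://<knox-host>:<port>/gateway/<topology>/<service-path>
    """
    return f"https://{knox_host}:{port}/gateway/{topology}/{service_path.strip('/')}"


# Every backend is reached through the same host and port; only the path changes.
for service_path in ("webhdfs", "templeton", "oozie", "hbase", "hive"):
    print(knox_url("knox-host", "default", service_path))
```

This is why users only have to remember one host: changing the backend of a service only changes the topology, not the URL they call.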

Customizing Apache Knox

To configure the Apache Knox gateway, we modify topology files. A topology file consists of three components: providers, the HA provider and services.

Topology

You can find the topology files in the topologies directory. The name of the file determines the URL: for a topology file named sandbox.xml, the URL will be https://knox-host:8443/gateway/sandbox/webhdfs.

Here is an example of a topology file:

```xml
<topology>
    <gateway>
        <!-- Authentication provider -->
        <provider>
            <role>authentication</role>
            <name>ShiroProvider</name>
            <enabled>true</enabled>
            <!-- [...] -->
        </provider>
        <!-- Identity assertion provider -->
        <provider>
            <role>identity-assertion</role>
            <name>HadoopGroupProvider</name>
            <enabled>true</enabled>
            <!-- [...] -->
        </provider>
        <!-- Authorization provider -->
        <provider>
            <role>authorization</role>
            <name>XASecurePDPKnox</name>
            <enabled>true</enabled>
            <!-- [...] -->
        </provider>
        <!-- HA provider -->
        <provider>
            <role>ha</role>
            <name>HaProvider</name>
            <enabled>true</enabled>
            <!-- [...] -->
        </provider>
    </gateway>
    <!-- Services -->
    <service>
        <role>WEBHDFS</role>
        <url>http://webhdfs-host:50070/webhdfs</url>
    </service>
</topology>
```

Be careful: if you use Apache Ambari, the admin, knoxsso, manager and default topologies must be modified through the web interface; otherwise, the files will be overwritten when the service restarts.
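Before deploying, it can be handy to list which providers and services a topology actually declares. Here is a minimal Python sketch using the standard library ElementTree parser on a trimmed version of the example above (provider parameters omitted). `summarize_topology` is a hypothetical helper, not part of Knox, and it does not replace `knoxcli.sh validate-topology`.

```python
import xml.etree.ElementTree as ET

TOPOLOGY = """<topology>
  <gateway>
    <provider>
      <role>authentication</role>
      <name>ShiroProvider</name>
      <enabled>true</enabled>
    </provider>
    <provider>
      <role>ha</role>
      <name>HaProvider</name>
      <enabled>true</enabled>
    </provider>
  </gateway>
  <service>
    <role>WEBHDFS</role>
    <url>http://webhdfs-host:50070/webhdfs</url>
  </service>
</topology>"""


def summarize_topology(xml_text: str) -> dict:
    """Extract providers (role, name, enabled) and service URLs from a topology."""
    root = ET.fromstring(xml_text)
    providers = [
        {
            "role": p.findtext("role"),
            "name": p.findtext("name"),
            "enabled": (p.findtext("enabled") or "").strip().lower() == "true",
        }
        for p in root.findall("./gateway/provider")
    ]
    # URLs are kept as a list because a service may declare several <url>
    # elements (for example when the HA provider is used).
    services = {
        s.findtext("role"): [u.text for u in s.findall("url")]
        for s in root.findall("./service")
    }
    return {"providers": providers, "services": services}


summary = summarize_topology(TOPOLOGY)
for provider in summary["providers"]:
    print(provider["role"], provider["name"], "enabled" if provider["enabled"] else "disabled")
print(summary["services"])
```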

Provider

Providers add features (authentication, federation, authorization, identity assertion, etc.) to the gateway that can be used by different services. A provider usually consists of one or more filters that are added to one or more topologies.

The Apache Knox gateway supports federation through HTTP headers. Federation provides a quick way to implement single sign-on (SSO) by propagating user and group information. Use it only in a highly controlled network environment.

The default authentication provider is Apache Shiro (ShiroProvider). It authenticates users against Active Directory or LDAP. For Kerberos authentication, use the HadoopAuth provider.

There are five main identity-assertion providers:

- Default: the default provider, for simple mapping of user names and/or groups. It is responsible for establishing the identity passed on to the rest of the cluster;

- Concat: composes new user names and/or groups by concatenating a prefix and/or a suffix;

- SwitchCase: converts user names and/or groups to the case (upper or lower) required by the ecosystem;

- Regex: translates incoming identities using a regular expression;

- HadoopGroupProvider: looks up the groups a user belongs to, which greatly simplifies the definition of permissions (via Apache Ranger).

If you use the HadoopGroupProvider, you must rely only on groups to set up permissions (via Apache Ranger). A JIRA (KNOX-821: Identity Assertion Providers must be able to be chained together) was opened to allow several identity assertion providers to be chained.
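To make the difference between the Concat, SwitchCase and Regex providers concrete, here is a small Python analogy of the transformation each one applies to an incoming user name. It is only an illustration, not Knox code: the function names and arguments are hypothetical, and the real providers are configured through parameters in the topology.

```python
import re


def concat(name: str, prefix: str = "", suffix: str = "") -> str:
    # Concat: build a new name by adding a prefix and/or a suffix.
    return f"{prefix}{name}{suffix}"


def switch_case(name: str, case: str = "lower") -> str:
    # SwitchCase: force the case expected by the ecosystem.
    return name.upper() if case == "upper" else name.lower()


def regex_map(name: str, pattern: str, template: str) -> str:
    # Regex: translate the identity with a regular expression.
    # The template uses Python's \1 back-reference syntax; unmatched names pass through.
    match = re.fullmatch(pattern, name)
    return match.expand(template) if match else name


# Chaining, as requested in KNOX-821, amounts to composing the transformations.
user = "John.Doe@EXAMPLE.COM"
local = regex_map(user, r"([^@]+)@.*", r"\1")
print(local)                                      # John.Doe
print(switch_case(local))                         # john.doe
print(concat(switch_case(local), prefix="ext_"))  # ext_john.doe
```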

Services

Services, in turn, add new routing rules to the gateway. Each service has its own directory; for example, here is the content of the HIVE service directory:

```console
-bash-4.1$ ls -lahR hive/
hive/:
total 12K
drwxr-xr-x 3 knox knox 4.0K Nov 16 2017 .
drwxr-xr-x 84 knox knox 4.0K Nov 16 17:07 ..
drwxr-xr-x 2 knox knox 4.0K Nov 16 2017 0.13.0

hive/0.13.0:
total 16K
drwxr-xr-x 2 knox knox 4.0K Nov 16 2017 .
drwxr-xr-x 3 knox knox 4.0K Nov 16 2017 ..
-rw-r--r-- 1 knox knox 949 May 31 2017 rewrite.xml
-rw-r--r-- 1 knox knox 1.1K May 31 2017 service.xml
```

The hive directory contains the 0.13.0 directory (which corresponds to the version of the service), and this folder contains the rewrite.xml and service.xml files.
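Because services follow this service/version/{service.xml, rewrite.xml} layout, and the version declared in service.xml must match the name of its parent folder, a quick consistency check can save a restart. The Python sketch below walks a services directory and reports, for each version folder, whether the declared version matches. The function name is hypothetical, and the directory to scan (the parent of hive/ in the listing) must be adapted to your installation; the demo builds a throw-away copy of the layout.

```python
import os
import tempfile
import xml.etree.ElementTree as ET


def list_services(services_dir: str) -> list:
    """Return (service, version, version_matches_folder) tuples.

    Expected layout: <services_dir>/<service>/<version>/{service.xml, rewrite.xml}
    """
    found = []
    for service in sorted(os.listdir(services_dir)):
        service_path = os.path.join(services_dir, service)
        if not os.path.isdir(service_path):
            continue
        for version in sorted(os.listdir(service_path)):
            version_path = os.path.join(service_path, version)
            service_xml = os.path.join(version_path, "service.xml")
            rewrite_xml = os.path.join(version_path, "rewrite.xml")
            if not (os.path.isfile(service_xml) and os.path.isfile(rewrite_xml)):
                continue
            # The version attribute of <service> must equal the folder name.
            declared = ET.parse(service_xml).getroot().get("version")
            found.append((service, version, declared == version))
    return found


# Demo on a temporary copy of the layout shown above (hypothetical file content).
with tempfile.TemporaryDirectory() as tmp:
    version_dir = os.path.join(tmp, "hive", "0.13.0")
    os.makedirs(version_dir)
    with open(os.path.join(version_dir, "service.xml"), "w") as f:
        f.write('<service role="HIVE" name="hive" version="0.13.0"/>')
    with open(os.path.join(version_dir, "rewrite.xml"), "w") as f:
        f.write("<rules/>")
    services = list_services(tmp)

print(services)  # [('hive', '0.13.0', True)]
```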

Personalized services

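Let's assume a REST application available at http://rest-application:8080/api/. Just create a new service and add it to the topology file. The snippets below are Jinja2 templates: replace {{ service_name }} and {{ knox_service_version }} with your own values.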

```xml
<topology>
    <!-- [...] -->
    <!-- Services -->
    <service>
        <role>{{ service_name | upper }}</role>
        <url>http://rest-application:8080</url>
    </service>
</topology>
```

In the service.xml file, the value of {{ knox_service_version }} must be equal to the name of the parent folder:

```xml
<service role="{{ service_name | upper }}" name="{{ service_name | lower }}" version="{{ knox_service_version }}">
    <policies>
        <policy role="webappsec"/>
        <policy role="authentication"/>
        <policy role="federation"/>
        <policy role="identity-assertion"/>
        <policy role="authorization"/>
        <policy role="rewrite"/>
    </policies>
    <routes>
        <route path="/{{ service_name | lower }}">
            <rewrite apply="{{ service_name | upper }}/{{ service_name | lower }}/inbound/root" to="request.url"/>
        </route>
        <route path="/{{ service_name | lower }}/**">
            <rewrite apply="{{ service_name | upper }}/{{ service_name | lower }}/inbound/path" to="request.url"/>
        </route>
        <route path="/{{ service_name | lower }}/?**">
            <rewrite apply="{{ service_name | upper }}/{{ service_name | lower }}/inbound/args" to="request.url"/>
        </route>
        <route path="/{{ service_name | lower }}/**?**">
            <rewrite apply="{{ service_name | upper }}/{{ service_name | lower }}/inbound/pathargs" to="request.url"/>
        </route>
    </routes>
</service>
```

The rewrite.xml file, where the URL is rewritten (here by adding /api):

```xml
<rules>
    <rule dir="IN" name="{{ service_name | upper }}/{{ service_name | lower }}/inbound/root" pattern="*://*:*/**/{{ service_name | lower }}">
        <rewrite template="{$serviceUrl[{{ service_name | upper }}]}/api/{{ service_name | lower }}"/>
    </rule>
    <rule dir="IN" name="{{ service_name | upper }}/{{ service_name | lower }}/inbound/path" pattern="*://*:*/**/{{ service_name | lower }}/{path=**}">
        <rewrite template="{$serviceUrl[{{ service_name | upper }}]}/api/{{ service_name | lower }}/{path=**}"/>
    </rule>
    <rule dir="IN" name="{{ service_name | upper }}/{{ service_name | lower }}/inbound/args" pattern="*://*:*/**/{{ service_name | lower }}/?{**}">
        <rewrite template="{$serviceUrl[{{ service_name | upper }}]}/api/{{ service_name | lower }}/?{**}"/>
    </rule>
    <rule dir="IN" name="{{ service_name | upper }}/{{ service_name | lower }}/inbound/pathargs" pattern="*://*:*/**/{{ service_name | lower }}/{path=**}?{**}">
        <rewrite template="{$serviceUrl[{{ service_name | upper }}]}/api/{{ service_name | lower }}/{path=**}?{**}"/>
    </rule>
</rules>
```

For more details on setting up custom services behind Apache Knox, here is a link to an article.
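To reason about what the four inbound rules produce, here is a Python sketch that mimics their templates with plain string handling, for a hypothetical service named `myapp` whose serviceUrl is http://rest-application:8080 (the URL declared in the topology). It is not Knox's rewrite engine, which has its own pattern language; it only shows which backend URL each kind of request should end up on. Note that, with these templates, the service name is also part of the backend path (/api/myapp/...).

```python
from urllib.parse import urlsplit


def rewrite_inbound(request_url: str, service_url: str, name: str) -> str:
    """Mimic the root / path / args / pathargs inbound rules of rewrite.xml.

    service_url plays the role of {$serviceUrl[...]} and name the role of
    {{ service_name | lower }}.
    """
    parts = urlsplit(request_url)
    segments = parts.path.split("/")
    if name not in segments:
        raise ValueError(f"{request_url} is not routed to /{name}")
    # Path segments after /<name>, e.g. "" or "users/42".
    path = "/".join(segments[segments.index(name) + 1:]).strip("/")
    base = f"{service_url}/api/{name}"
    if not path and not parts.query:
        return base                        # .../inbound/root
    if path and not parts.query:
        return f"{base}/{path}"            # .../inbound/path
    if not path:
        return f"{base}/?{parts.query}"    # .../inbound/args
    return f"{base}/{path}?{parts.query}"  # .../inbound/pathargs


SERVICE_URL = "http://rest-application:8080"
print(rewrite_inbound("https://knox-host:8443/gateway/default/myapp/users/42?page=2",
                      SERVICE_URL, "myapp"))
# http://rest-application:8080/api/myapp/users/42?page=2
```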

Tips and tricks

Setting up SSL

```bash
# Requirements
export KEYALIAS=gateway-identity
export PRIVATEKEYALIAS=gateway-identity-passphrase
export KEYPASS=4eTTb03v
export P12PASS=$KEYPASS
export JKSPASS=$KEYPASS

# Save old Keystore
mv /usr/hdp/current/knox-server/data/security/keystores /usr/hdp/current/knox-server/data
mkdir /usr/hdp/current/knox-server/data/security/keystores
chown knox:knox /usr/hdp/current/knox-server/data/security/keystores
chmod 755 /usr/hdp/current/knox-server/data/security/keystores

# Generate Knox Master Key (interactive: enter the value of $JKSPASS twice)
/usr/hdp/current/knox-server/bin/knoxcli.sh create-master --force
# > Enter master secret: $JKSPASS
# > Enter master secret again: $JKSPASS

# Generate the Keystore
openssl pkcs12 -export -in /etc/security/.ssl/`hostname -f`.cer -inkey /etc/security/.ssl/`hostname -f`.key -passin env:KEYPASS \
  -out /usr/hdp/current/knox-server/data/security/keystores/gateway.p12 -password env:P12PASS -name $KEYALIAS
keytool -importkeystore -deststorepass $JKSPASS -destkeypass $KEYPASS \
  -destkeystore /usr/hdp/current/knox-server/data/security/keystores/gateway.jks \
  -srckeystore /usr/hdp/current/knox-server/data/security/keystores/gateway.p12 \
  -srcstoretype PKCS12 -srcstorepass $P12PASS -alias $KEYALIAS

# Import the parent (CA) certificates
keytool -import -file /etc/security/.ssl/enterprise.cer -alias enterprise \
  -keystore /usr/hdp/current/knox-server/data/security/keystores/gateway.jks -storepass $JKSPASS

# Save the private key passphrase as a Knox alias
/usr/hdp/current/knox-server/bin/knoxcli.sh create-alias $PRIVATEKEYALIAS --value $JKSPASS
```

When saving the private key passphrase, the alias name ($PRIVATEKEYALIAS) must be gateway-identity-passphrase.

The password must match the one used when generating the master key (Apache Knox Master secret). That’s why we use the same password everywhere ($JKSPASS).
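Once the new keystore is in place, clients should be able to validate the gateway certificate against the enterprise CA. Here is a Python sketch of such a check, using only the standard library `ssl` and `socket` modules; the function names, the default port 8443 and the CA file path in the usage comment are assumptions. Python expects the CA file in PEM format: if enterprise.cer is DER-encoded, convert it first, for example with `openssl x509 -inform der -in enterprise.cer -out enterprise.pem`.

```python
import socket
import ssl


def make_client_context(cafile=None) -> ssl.SSLContext:
    """Client TLS context that verifies the gateway certificate and host name.

    cafile: PEM file holding the enterprise CA; None means the system trust store.
    """
    ctx = ssl.create_default_context(cafile=cafile)
    # create_default_context already enables both checks; made explicit here.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def gateway_certificate_subject(host: str, port: int = 8443, cafile=None) -> dict:
    """Connect to the gateway and return the subject of its verified certificate.

    Raises ssl.SSLCertVerificationError if the chain or host name does not validate.
    """
    ctx = make_client_context(cafile)
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    # 'subject' is a tuple of RDNs, each a tuple of (key, value) pairs.
    return {key: value for rdn in cert["subject"] for (key, value) in rdn}


# Usage (hypothetical host and CA path):
# print(gateway_certificate_subject("knox-host", cafile="/etc/security/.ssl/enterprise.pem"))
```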

Common mistakes

First of all, a clean restart of Apache Knox solves some problems. Purge the logs before replaying the problematic query, so that they only contain the relevant entries.

```bash
# Stop Apache Knox via Ambari

# Delete topologies and services deployments
rm -rf /usr/hdp/current/knox-server/data/deployments/*.topo.*

# Delete JCEKS keystores
rm -rf /usr/hdp/current/knox-server/data/security/keystores/*.jceks

# Purge logs
rm -rf /var/log/knox/*

# Kill zombie process
ps -ef | grep `cat /var/run/knox/gateway.pid`
kill -9 `cat /var/run/knox/gateway.pid`

# Start Apache Knox via Ambari
```

Bulky answer

If your queries fail quickly and return a 500 error, the request (for example a long Hive query) may be too large for the replay buffer (8 KB by default). You will find something like this in the gateway-audit.log file:

```text
dispatch|uri|http://<hostname>:10000/cliservice?doAs=<user>|success|Response status: 500
```

To fix it, add the following parameter to the service in question in the topology (the value is expressed in KB):

```xml
<param name="replayBufferSize" value="32"/>
```

Apache Solr

If you have enabled Apache Ranger auditing to Apache Solr (xasecure.audit.destination.solr=true), Apache Knox may stop working when Apache Solr has problems, for example when its filesystem runs out of space.

Apache Hive connections often disconnected via Apache Knox

To correct this problem, add the following properties to the gateway-site configuration file (adapt the values to your environment):

```properties
# 600000 ms = 10 minutes
gateway.httpclient.connectionTimeout=600000
# 600000 ms = 10 minutes
gateway.httpclient.socketTimeout=600000
gateway.metrics.enabled=false
gateway.jmx.metrics.reporting.enabled=false
gateway.httpclient.maxConnections=128
```

How to check my deployment?

Apache Knox provides a command-line client (knoxcli.sh) to check several aspects of your deployment.

Certificate validation

Check the certificate used by the Apache Knox instance to verify that it was issued by your company's certificate authority.

```bash
openssl s_client -showcerts -connect {{ knoxhostname }}:8443
```

Topology validation

Check that the description of a cluster (the cluster name is the topology name) follows the correct formatting rules.

```bash
/usr/hdp/current/knox-server/bin/knoxcli.sh validate-topology [--cluster clustername] | [--path "path/to/file"]
```

Authentication and authorization via LDAP

This command tests the ability of a cluster configuration (topology) to authenticate a user with the ShiroProvider settings. The --g parameter lists the groups the user is a member of. The --u and --p parameters are optional; if they are not provided, the terminal prompts for them.

```bash
/usr/hdp/current/knox-server/bin/knoxcli.sh user-auth-test [--cluster clustername] [--u username] [--p password] [--g]
```

Topology / LDAP binding

This command tests the ability of a cluster configuration (topology) to bind to LDAP using only the system user provided in the ShiroProvider settings.

```bash
/usr/hdp/current/knox-server/bin/knoxcli.sh system-user-auth-test [--cluster c] [--d]
```

Gateway test

Using the HTTP Basic access authentication method:

```bash
export KNOX_URL=knox.local
# Double quotes so that the shell expands $KNOX_URL
curl -vik -u admin:admin-password "https://$KNOX_URL:8443/gateway/default/webhdfs/v1/?op=LISTSTATUS"
```

Using the Kerberos authentication method:

```bash
export KNOX_URL=knox.local
export KNOX_USER=knoxuser
kdestroy
kinit $KNOX_USER
# With --negotiate the -u credentials are not used; "-u :" enables the authentication code
curl -i -k --negotiate -u : "https://$KNOX_URL:8443/gateway/kdefault/webhdfs/v1/?op=LISTSTATUS"
```

In this case, we use the kdefault topology, which uses a HadoopAuth authentication provider.
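The Basic authentication test can also be scripted, for example from a monitoring job. Below is a Python sketch using only `urllib` from the standard library; the function names are hypothetical, and the Kerberos variant is not covered because the Python standard library has no SPNEGO support. The `insecure` flag reproduces curl's `-k` and should only be used in test environments.

```python
import base64
import json
import ssl
import urllib.request


def liststatus_request(knox_host, user, password, topology="default", path="/", port=8443):
    """Build a WebHDFS LISTSTATUS request routed through Knox with HTTP Basic auth."""
    url = (f"https://{knox_host}:{port}/gateway/{topology}"
           f"/webhdfs/v1{path}?op=LISTSTATUS")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def list_status(request, insecure=False):
    """Send the request and return the FileStatus entries of the JSON answer."""
    ctx = ssl.create_default_context()
    if insecure:
        # Equivalent of curl -k: skip certificate checks (test environments only).
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=ctx, timeout=30) as resp:
        body = json.load(resp)
    return body["FileStatuses"]["FileStatus"]


# Usage (needs a reachable gateway):
# for entry in list_status(liststatus_request("knox.local", "admin", "admin-password")):
#     print(entry["pathSuffix"], entry["type"])
```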

Direct reading

The /usr/hdp/current/knox-server/data/deployments/ directory contains one folder per deployment of your topologies. Whenever you update a topology, a new directory named {{ topologyname }}.topo.{{ timestamp }} is created.

In the %2F/WEB-INF/ subdirectory, you will find the rewrite.xml file, which concatenates the rewrite rules of the services used by the topology. In this file, you can check that your rewrite rules have been taken into account.
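With many redeployments, finding the right folder by hand gets tedious. The Python sketch below picks the most recent deployment of each topology and lists the rule names of a deployed rewrite.xml, so you can check that, for example, a rule named MYAPP/myapp/inbound/root is present. Both helpers are hypothetical, and they assume the suffix after `.topo.` is a hexadecimal timestamp (the directory names in the listing are consistent with this, but it is an assumption).

```python
import xml.etree.ElementTree as ET


def latest_deployments(dir_names):
    """Map each topology to its most recent <topology>.topo.<suffix> directory.

    Assumption: the suffix is a hexadecimal timestamp, so a larger value
    means a more recent deployment.
    """
    latest = {}
    for dir_name in dir_names:
        topology, sep, suffix = dir_name.rpartition(".topo.")
        if not sep:
            continue  # not a deployment directory
        try:
            stamp = int(suffix, 16)
        except ValueError:
            continue
        if topology not in latest or stamp > latest[topology][0]:
            latest[topology] = (stamp, dir_name)
    return {topology: dir_name for topology, (_, dir_name) in latest.items()}


def rewrite_rule_names(rewrite_xml_text):
    """Return the names of the rules declared in a deployed rewrite.xml."""
    root = ET.fromstring(rewrite_xml_text)
    return [rule.get("name") for rule in root.iter("rule")]


# On a gateway host, the names would come from
# os.listdir("/usr/hdp/current/knox-server/data/deployments").
names = [
    "admin.topo.168760a5c28",
    "admin.topo.16880fef4b8",
    "default.topo.168760a5c28",
    "knoxsso.topo.168760a5c28",
    "manager.topo.15f6b1f2888",
]
print(latest_deployments(names)["admin"])  # admin.topo.16880fef4b8
```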

```console
$ cd /usr/hdp/current/knox-server/data/deployments/
$ ll
total 0
drwxr-xr-x. 4 knox knox 31 22 janv. 16:04 admin.topo.168760a5c28
drwxr-xr-x. 4 knox knox 31 24 janv. 18:53 admin.topo.16880fef4b8
drwxr-xr-x. 4 knox knox 31 22 janv. 16:04 default.topo.168760a5c28
drwxr-xr-x. 5 knox knox 49 22 janv. 16:04 knoxsso.topo.168760a5c28
drwxr-xr-x. 5 knox knox 49 22 janv. 16:04 manager.topo.15f6b1f2888
$ cd default.topo.168760a5c28/%2F/WEB-INF/
$ ll
total 104
-rw-r--r--. 1 knox knox 70632 22 janv. 16:04 gateway.xml
-rw-r--r--. 1 knox knox 1112 22 janv. 16:04 ha.xml
-rw-r--r--. 1 knox knox 19384 22 janv. 16:04 rewrite.xml
-rw-r--r--. 1 knox knox 586 22 janv. 16:04 shiro.ini
-rw-r--r--. 1 knox knox 1700 22 janv. 16:04 web.xml
```

Apache Knox Ranger plugin debug

This configuration shows what is sent to Apache Ranger by the Apache Knox plugin. Modify the gateway-log4j.properties file as below, restart your Apache Knox instances and check the logs in the ranger.knoxagent.log file.

```properties
ranger.knoxagent.logger=DEBUG,console,KNOXAGENT
ranger.knoxagent.log.file=ranger.knoxagent.log
log4j.logger.org.apache.ranger=${ranger.knoxagent.logger}
log4j.additivity.org.apache.ranger=false
log4j.appender.KNOXAGENT=org.apache.log4j.DailyRollingFileAppender
log4j.appender.KNOXAGENT.File=${app.log.dir}/${ranger.knoxagent.log.file}
log4j.appender.KNOXAGENT.layout=org.apache.log4j.PatternLayout
log4j.appender.KNOXAGENT.layout.ConversionPattern=%d{ISO8601} %p %c{2}: %m%n %L
log4j.appender.KNOXAGENT.DatePattern=.yyyy-MM-dd
```

Improve response times via Apache Knox

If response times are much higher through Apache Knox than with direct access, you can disable metrics with the following settings in the gateway-site configuration file.

```properties
gateway.metrics.enabled=false
gateway.jmx.metrics.reporting.enabled=false
gateway.graphite.metrics.reporting.enabled=false
```

Conclusion

In conclusion, Apache Knox, combined with Apache Ranger audits, is a powerful tool to filter and audit all access to your environment(s). Through configuration alone, it can also be used as a classic gateway in front of your own custom services. For example, you can add Kerberos authentication in front of REST APIs that do not support it. Now it's your turn to experiment, and to share your feedback in the comment section.

Sources

- http://knox.apache.org/books/knox-0-12-0/user-guide.html

- http://knox.apache.org/books/knox-0-12-0/dev-guide.html

- https://fr.slideshare.net/KevinMinder/knox-hadoopsummit20140505v6pub

- https://issues.apache.org/jira/browse/KNOX-821

- https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.6.3/bk_security/content/configure_ssl_for_knox.html

- https://community.hortonworks.com/content/supportkb/153880/hive-connection-through-knox-disconnects-continuou.html

- https://community.hortonworks.com/articles/114601/how-to-configure-and-troubleshoot-a-knox-topology.html

- https://community.hortonworks.com/articles/113013/how-to-troubleshoot-and-application-behind-apache.html

- https://community.hortonworks.com/content/supportkb/191924/how-to-improve-performance-of-knox-request-per-sec.html

- https://cwiki.apache.org/confluence/display/KNOX/KIP-2+Metrics

- https://issues.apache.org/jira/browse/KNOX-643