Adaltas is a team of consultants based in France, Canada and Morocco, with a focus on Open Source, Big Data and distributed systems.

- Architecture, audit and digital transformation

- Cloud and on-premise operation

- Complex application and ingestion pipelines

- Efficient and reliable solutions delivery

Latest articles

Introduction to OpenLineage

Categories: Big Data, Data Governance, Infrastructure | Tags: Atlas, Data Engineering, Infrastructure, Data Lake, Data lakehouse, Data Warehouse, Data lineage

OpenLineage is an open-source specification for data lineage. The specification is complemented by Marquez, its reference implementation. Since its launch in late 2020, OpenLineage has been a presence…

Dec 19, 2023

Installation Guide to TDP, the 100% open source big data platform

Categories: Big Data, Infrastructure | Tags: Infrastructure, VirtualBox, Hadoop, Vagrant, TDP

The Trunk Data Platform (TDP) is a 100% open source big data distribution, based on Apache Hadoop and compatible with HDP 3.1. Initiated in 2021 by EDF, the DGFiP and Adaltas, the project is governed…

By Paul FARAULT

Oct 18, 2023

New TDP website launched

Categories: Big Data | Tags: Programming, Ansible, Hadoop, Python, TDP

The new TDP (Trunk Data Platform) website is online. We invite you to browse its pages to discover the platform, stay informed, and cultivate contact with the TDP community. TDP is a completely open…

By David WORMS

Oct 3, 2023

CDP part 6: end-to-end data lakehouse ingestion pipeline with CDP

Categories: Big Data, Data Engineering, Learning | Tags: NiFi, Business intelligence, Data Engineering, Iceberg, Spark, Big Data, Cloudera, CDP, Data Analytics, Data Lake, Data Warehouse

In this hands-on lab session we demonstrate how to build an end-to-end big data solution with Cloudera Data Platform (CDP) Public Cloud, using the infrastructure we have deployed and configured over…

Jul 24, 2023

CDP part 5: user permissions management on CDP Public Cloud

Categories: Big Data, Cloud Computing, Data Governance | Tags: Ranger, Cloudera, CDP, Data Warehouse

When you create a user or a group in CDP, it requires permissions to access resources and use the Data Services. This article is the fifth in a series of six: CDP part 1: introduction to end-to-end…

Jul 18, 2023

CDP part 4: user management on CDP Public Cloud with Keycloak

Categories: Big Data, Cloud Computing, Data Governance | Tags: EC2, Big Data, CDP, Docker Compose, Keycloak, SSO

Previous articles in the series cover the deployment of a CDP Public Cloud environment. All the components are ready for use and it is time to make the environment available to other users to explore…

Jul 4, 2023

CDP part 3: Data Services activation on CDP Public Cloud environment

Categories: Big Data, Cloud Computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloudera, CDP

One of the big selling points of Cloudera Data Platform (CDP) is its mature managed service offering. These services are easy to deploy on-premises, in the public cloud or as part of a hybrid solution. The…

Jun 27, 2023

CDP part 2: CDP Public Cloud deployment on AWS

Categories: Big Data, Cloud Computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloud, Cloudera, CDP, Cloudera Manager

The Cloudera Data Platform (CDP) Public Cloud provides the foundation upon which full featured data lakes are created. In a previous article, we introduced the CDP platform. This article is the second…

Jun 19, 2023

CDP part 1: introduction to end-to-end data lakehouse architecture with CDP

Categories: Cloud Computing, Data Engineering, Infrastructure | Tags: Data Engineering, Hortonworks, Iceberg, AWS, Azure, Big Data, Cloud, Cloudera, CDP, Cloudera Manager, Data Warehouse

Cloudera Data Platform (CDP) is a hybrid data platform for big data transformation, machine learning and data analytics. In this series we describe how to build and use an end-to-end big data…

By Stephan BAUM

Jun 8, 2023

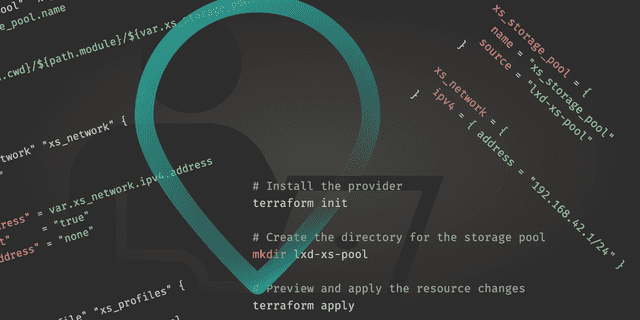

Local development environments with Terraform + LXD

Categories: Containers Orchestration, DevOps & SRE | Tags: Automation, DevOps, KVM, LXD, Virtualization, VM, Terraform, Vagrant

As a Big Data Solutions Architect and InfraOps, I need development environments to install and test software. They have to be configurable, flexible, and performant. Working with distributed systems…

Jun 1, 2023

Data platform requirements and expectations

Categories: Big Data, Infrastructure | Tags: Data Engineering, Data Governance, Data Analytics, Data Hub, Data Lake, Data lakehouse, Data Science

A big data platform is a complex and sophisticated system that enables organizations to store, process, and analyze large volumes of data from a variety of sources. It is composed of several…

By David WORMS

Mar 23, 2023

Keycloak deployment in EC2

Categories: Cloud Computing, Data Engineering, Infrastructure | Tags: Security, EC2, Authentication, AWS, Docker, Keycloak, SSL/TLS, SSO

Why use Keycloak? Keycloak is an open-source identity provider (IdP) using single sign-on (SSO). An IdP is a tool to create, maintain, and manage identity information for principals and to provide…

By Stephan BAUM

Mar 14, 2023

Operating Kafka in Kubernetes with Strimzi

Categories: Big Data, Containers Orchestration, Infrastructure | Tags: Kafka, Big Data, Kubernetes, Open source, Streaming

Kubernetes is not the first platform that comes to mind to run Apache Kafka clusters. Indeed, Kafka’s strong dependency on storage might be a pain point regarding Kubernetes’ way of doing things when…

Mar 7, 2023

Kubernetes: debugging with ephemeral containers

Categories: Containers Orchestration, Tech Radar | Tags: Debug, Kubernetes

Anyone who has ever had to work with Kubernetes has been confronted with resolving pod errors. The methods provided for this purpose are efficient and make it possible to overcome the most…

Feb 7, 2023

Dive into tdp-lib, the SDK in charge of TDP cluster management

Categories: Big Data, Infrastructure | Tags: Programming, Ansible, Hadoop, Python, TDP

All the deployments are automated and Ansible plays a central role. With the growing complexity of the code base, a new system was needed to overcome Ansible's limitations, which will enable us to…

Jan 24, 2023

Adaltas Summit 2022 Morzine

Categories: Big Data, Adaltas Summit 2022 | Tags: Data Engineering, Infrastructure, Iceberg, Container, Data lakehouse, Docker, Kubernetes

For its third edition, the whole Adaltas crew is gathering in Morzine for a full week, with two days dedicated to technology: the 15th and 16th of September 2022. The speakers choose one of the…

By David WORMS

Jan 13, 2023

How to build your OCI images using Buildpacks

Categories: Containers Orchestration, DevOps & SRE | Tags: CNCF, OCI, CI/CD, Docker, Kubernetes

Docker has become the new standard for building your application. In a Docker image, we place our source code, its dependencies and some configuration, and our application is almost ready to be deployed…

Jan 9, 2023

Big data infrastructure internship

Categories: Big Data, Data Engineering, DevOps & SRE, Infrastructure | Tags: Infrastructure, Hadoop, Big Data, Cluster, Internship, Kubernetes, TDP

Job description: Big Data and distributed computing are at the core of Adaltas. We support our partners in the deployment, maintenance, and optimization of some of the largest clusters in France…

By Stephan BAUM

Dec 2, 2022