Multihoming on Hadoop

Mar 5, 2019

- Categories

- Infrastructure

- Tags

- Hadoop

- HDFS

- Kerberos

- Network


Multihoming, which means having multiple networks attached to one node, is one of the main components to manage the heterogeneous network usage of an Apache Hadoop cluster. This article is an introduction to the concept and its applications for real-world businesses.

Context

First off, for a general overview of network multihoming on Hadoop, be sure to check Matt Foley’s article. It is a great start to understand, at a high level, what networking in Hadoop is about.

In this blog post, we will cover some of his points and add real-world business use-cases as well as a detailed explanation for Apache HDFS in particular.

What is multihoming?

In complex production environments, servers usually have multiple network interface controllers (NICs), be it for performance, network isolation, business-related purposes or any other reason tech guys at large corporations might think of.

Sometimes these NICs are bonded together to multiply their throughput, in which case they function as a single high-performance interface. They do not need special network routing configuration as they serve the same network. Although this is a multi-NIC environment, it cannot be considered a multihoming situation.

Other times, each NIC points to a completely different network, each with its own group of hosts that have nothing to do with each other. With a single host attached to multiple networks, this is our first situation of real multihoming. But the right NIC is then chosen based on the network the remote host belongs to, and no additional routing configuration is needed either.

However, there are times when multiple hosts share multiple networks. By default, the previously explained rule applies and the first NIC connected to a network on which both hosts are members will be chosen. The order in which each NIC is checked is then the deciding factor, and the same network will be chosen for all communication between these two hosts.
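The default rule described above can be sketched in a few lines of Python. This is a simplified model, not how an operating system actually routes packets (real hosts use a routing table with prefix lengths and metrics). The function name, interface names and addresses are hypothetical, and the order of the list stands in for the order in which NICs are checked.

```python
import ipaddress

def pick_interface(local_interfaces, remote_addresses):
    """Return (nic_name, remote_address) for the first local NIC whose
    network also contains one of the remote host's addresses.

    local_interfaces: ordered list of (name, "address/prefix") pairs.
    remote_addresses: addresses of the remote host, one per network it is on.
    """
    remotes = [ipaddress.ip_address(a) for a in remote_addresses]
    for name, cidr in local_interfaces:
        network = ipaddress.ip_interface(cidr).network
        for remote in remotes:
            if remote in network:
                return name, str(remote)
    return None  # no shared network found in this simplified model

# Hypothetical hosts that share two networks.
local = [("eth0", "192.168.100.11/24"), ("eth1", "10.0.0.11/24")]
remote = ["10.0.0.12", "192.168.100.12"]

print(pick_interface(local, remote))        # ('eth0', '192.168.100.12')
print(pick_interface(local[::-1], remote))  # ('eth1', '10.0.0.12')
```

Reversing the order of the local NICs changes the chosen network, and every exchange between the two hosts then goes through that single network. This is the limitation the next paragraph addresses.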

In this last situation, the default behaviour might not be enough. In Hadoop for example, we might want to divide network communication based on the software components that are generating it, or even based on what task they are currently performing. Hadoop thus has to be able to handle these multiple networks and use the right one for each job.

Why would we want multihoming?

Having multiple networks in production environments enables various governance capabilities. For instance, you might want to use a local network dedicated to your Hadoop cluster and a separate one to communicate with external components. Or, within a cluster, you might want your HDP components to talk to each other on their own network, and have clients access them through another one. Or all of it in the case of a disaster recovery architecture, where you have a custom high-bandwidth network for data backup jobs between clusters, each of them having its own multi-network architecture to separate intra-component communication and client access.

There might be other reasons, but these are the ones we encountered in our client environments.

Let’s get a bit more precise here. We will limit ourselves to HDFS, for which there are two main use cases a business might be in.

Case 1: client-service vs service-service

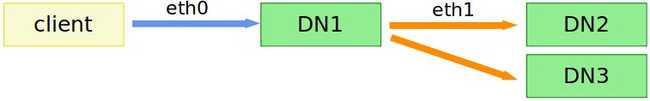

In an hdfs dfs -put task, there are two types of network communications that DataNode hosts will handle.

The first one is between the client (the one who launched the task) and the DataNode on which the first HDFS block will be written. We will call it the client-service communication for now.

Once the block is written, the second type of communication starts between the DataNode host it was just written on and another DataNode host on which a replica of the block has to be written. This one will be called a service-service communication.

In the previous illustration, the client-service communication is represented by the blue arrow and hosted on the network mapped to the eth0 NIC of the client and of the DN1 (DataNode service component) host. The service-service communication is represented by the orange arrow and hosted on the network mapped to the eth1 NIC of each DataNode host.

This case is especially useful to separate a user-dependent front network, on which you might not have control over the workload, from a backend network dedicated to critical service-to-service communications.

Case 2: intra-cluster vs inter-cluster

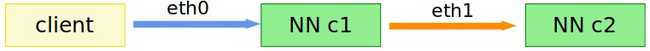

Still talking about HDFS, let’s take the hadoop distcp task as an example this time. It is a common command used to transfer HDFS data from one cluster to another.

The client who launched the command and all HDFS components from each cluster will communicate through up to three different networks:

- the one between the client and the NameNode and DataNodes of the source cluster

- the one between the HDFS components of the source cluster and the ones of the destination cluster

- the one between the HDFS components of the destination cluster

In the previous illustration, we simplified it by only taking into account the communications between the client and the active NameNode of each cluster, and assumed that the command was launched on the source cluster.

To start gathering metadata information from the NameNode of the source cluster, the client will use the intra-cluster network mapped to the eth0 NIC represented by the blue arrow.

Then, to get additional information about the state of the destination cluster, the NameNode of the source cluster will use the inter-cluster network mapped to the eth1 NIC, represented by an orange arrow, to communicate with its remote counterpart.

This case is very often used for disaster recovery, in which two physically remote clusters each have their own internal network to talk with components of the same environment and a separate network for external communications between each other.

What is involved?

This is the last knowledge gathering part before getting to the practical implementation. In the previous section, we explained that multihoming involves the choice of a network for a given communication between two hosts. This is called network resolution.

There are two ways to resolve a network to communicate with a remote host:

- Choice of a NIC (reminder: network interface controller) which is bound to a single network

- Hostname resolution, directly from the local system or through a DNS

NIC-based configurations take various kinds of values for different use cases. To define the identity of a specific host on a given network, the value is usually a single IP address like 192.168.100.1. For a group of hosts, this value is extended to an IP range, which in CIDR notation might look like 192.168.100.0/24.

On the other hand, to open communications from and/or to multiple networks, some configurations can be set to a value like 0.0.0.0 or 0.0.0.0:0. This is used for example to make the NameNode process listen on all NICs of the node it is hosted on, or to open a Knox topology to all hosts that share its network.
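To illustrate what the wildcard address means at the socket level, here is a minimal Python sketch. It is not Hadoop code: the `listen_on` helper is hypothetical, and it only shows the generic behaviour of binding a TCP server to 0.0.0.0 versus a single address, with port 0 asking the operating system for any free port.

```python
import socket

def listen_on(host, port=0):
    """Open a listening TCP socket bound to host.

    Port 0 lets the operating system pick a free port.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, port))
    server.listen()
    return server

wildcard = listen_on("0.0.0.0")    # accepts connections arriving on any IPv4 interface
loopback = listen_on("127.0.0.1")  # accepts connections on the loopback interface only

print(wildcard.getsockname()[0])  # 0.0.0.0
print(loopback.getsockname()[0])  # 127.0.0.1

wildcard.close()
loopback.close()
```

A process bound to a single address is reachable only through the network that address belongs to, which is what makes binding a useful lever in a multihomed setup.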

Sometimes a configuration is specifically designed to define a single NIC for the hosts it is applied on. It then directly uses the name of the NIC as its value: eth0, eth1, wlp2s0, virbr0, etc.

In place of an IP address, configurations tend to accept fully qualified hostnames as well. You might then use for example myhost.domain1 and myhost.domain2 to define the communications from and to myhost. Be careful, most Hadoop components do not handle multiple short names for a single host. Using myhost.domain1 and otherhost.domain2 as fully qualified names for the same node is thus not supported!
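The short name constraint can be checked before deploying a configuration. The following Python sketch is a hypothetical helper, not part of Hadoop; it assumes the short name is simply the first label of the fully qualified name.

```python
def short_name(fqdn):
    """Return the first label of a fully qualified hostname."""
    return fqdn.split(".", 1)[0]

def same_short_name(fqdns):
    """True when all the names given for one node share a single short name."""
    return len({short_name(fqdn) for fqdn in fqdns}) == 1

print(same_short_name(["myhost.domain1", "myhost.domain2"]))     # True
print(same_short_name(["myhost.domain1", "otherhost.domain2"]))  # False
```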

How do we do it?

Hadoop (HDFS and YARN), Apache HBase, Apache Oozie, and other core Big Data services share a common pattern for their components’ configurations. For simplicity’s sake, only the HDFS NameNode’s configurations will be detailed, but the concept is the same for most components.

The HDFS NameNode component has three separate groups of properties, one for each protocol it can be reached through, and an additional one to differentiate inter-service communications from the others:

- dfs.namenode.http* for HTTP communications (obsolete if HTTPS_ONLY enabled) to WebHDFS

- dfs.namenode.https* for HTTPS communications (obsolete if HTTP_ONLY enabled) to WebHDFS

- dfs.namenode.rpc* for RPC communications to the NameNode

- dfs.namenode.servicerpc* for RPC communications to the NameNode from other HDFS components (e.g. DataNodes)

If high-availability is enabled, these properties have to be set for each NameNode.
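Since each of the four prefixes is repeated for every NameNode, the keys are easy to generate. The Python sketch below is only an illustration under assumptions: the helper names, the `mycluster` nameservice and the `nn1.example.com` hostname are hypothetical, and `address` is used as an example of a suffix completing the prefixes above. The second helper renders a mapping in the `<configuration>` layout used by Hadoop XML files such as hdfs-site.xml.

```python
import xml.etree.ElementTree as ET

PROTOCOLS = ("http", "https", "rpc", "servicerpc")

def namenode_keys(suffix, nameservice, namenode_ids):
    """Build one key per protocol and per NameNode,
    e.g. dfs.namenode.rpc-address.mycluster.nn1."""
    return [
        f"dfs.namenode.{protocol}-{suffix}.{nameservice}.{nn_id}"
        for protocol in PROTOCOLS
        for nn_id in namenode_ids
    ]

def to_hadoop_xml(properties):
    """Render a {name: value} mapping as a Hadoop <configuration> document."""
    configuration = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(configuration, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(configuration, encoding="unicode")

keys = namenode_keys("address", "mycluster", ["nn1", "nn2"])
print(len(keys))  # 8
print(keys[0])    # dfs.namenode.http-address.mycluster.nn1

# Hypothetical value for the first key only.
print(to_hadoop_xml({keys[0]: "nn1.example.com:50070"}))
```

Generating the keys rather than typing them avoids the most common mistake in HA setups, which is configuring a property for one NameNode and forgetting its twin.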

Who am I talking to? aka. -address property

The first group of properties is used by external sources (clients, other services’ components) to know whom to communicate with for a given task. These properties have their protocol name suffixed by -address. Even in an environment without multihoming, these configurations are required.

A highly-available and multihomed HDFS cluster which separates inter-service and default RPC communications could have these properties:

dfs.namenode.http-address.$namespace.nn1: $nn1_fqdn:50070

dfs.namenode.http-address.$namespace.nn2: $nn2_fqdn:50070

dfs.namenode.https-address.namespace.nn1: $nn1_fqdn:50470

dfs.namenode.https-address.$namespace.nn2: $nn2_fqdn:50470

dfs.namenode.rpc-address.$namespace.nn1: $nn1_fqdn:8020

dfs.namenode.rpc-address.$namespace.nn2: $nn2_fqdn:8020

dfs.namenode.servicerpc-address.$namespace.nn1: $nn1_fqdn2:8021

dfs.namenode.servicerpc-address.$namespace.nn2: $nn2_fqdn2:8021

Where am I listening? aka. -bind-host property

The second group of properties is primarily used in a multihomed environment. It is used by a component to know which network to listen on. These properties have their protocol name suffixed by -bind-host.

The same highly-available and multihomed HDFS cluster which separates inter-service and default RPC communications could have these properties:

dfs.namenode.http-bind-host.$namespace.nn1: $nn1_fqdn

dfs.namenode.http-bind-host.$namespace.nn2: $nn2_fqdn

dfs.namenode.https-bind-host.$namespace.nn1: $nn1_fqdn

dfs.namenode.https-bind-host.$namespace.nn2: $nn2_fqdn

dfs.namenode.rpc-bind-host.$namespace.nn1: $nn1_fqdn

dfs.namenode.rpc-bind-host.$namespace.nn2: $nn2_fqdn

dfs.namenode.servicerpc-bind-host.$namespace.nn1: $nn1_fqdn2

dfs.namenode.servicerpc-bind-host.$namespace.nn2: $nn2_fqdn2

For most use cases though, the NameNodes should listen to all networks available to them:

dfs.namenode.http-bind-host.$namespace.nn1: 0.0.0.0

dfs.namenode.http-bind-host.$namespace.nn2: 0.0.0.0

dfs.namenode.https-bind-host.$namespace.nn1: 0.0.0.0

dfs.namenode.https-bind-host.$namespace.nn2: 0.0.0.0

dfs.namenode.rpc-bind-host.$namespace.nn1: 0.0.0.0

dfs.namenode.rpc-bind-host.$namespace.nn2: 0.0.0.0

dfs.namenode.servicerpc-bind-host.$namespace.nn1: 0.0.0.0

dfs.namenode.servicerpc-bind-host.$namespace.nn2: 0.0.0.0

Other properties involved

To complete a configuration set for a multihomed HDFS cluster, some additional properties may be used.

To communicate with HDFS components, most processes tend to ask the NameNode for information. This includes hostname resolution to communicate to another NameNode or a DataNode. But sometimes DataNodes and clients should use their own hostname resolution instead of the one of the NameNode. To achieve this, the following properties must be set to true:

- dfs.client.use.datanode.hostname=true for the client to resolve its DNS lookup locally

- dfs.datanode.use.datanode.hostname=true for the DataNodes to resolve their DNS lookup locally
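The effect of these flags can be pictured with a small simulation. The Python sketch below does not call HDFS: the two dictionaries are hypothetical stand-ins for the hostname resolution of the NameNode and of the client (in practice /etc/hosts or DNS), and the addresses are invented for the example.

```python
# Hypothetical resolution tables: each side maps the same DataNode
# hostname to the address that is reachable from its own network.
NAMENODE_VIEW = {"dn1.example.com": "10.0.0.21"}     # backend network
CLIENT_VIEW = {"dn1.example.com": "192.168.100.21"}  # front network

def datanode_target(hostname, use_datanode_hostname):
    """Return the address a client ends up connecting to for a DataNode."""
    if use_datanode_hostname:
        # The client keeps the hostname and resolves it with its own resolver.
        return CLIENT_VIEW[hostname]
    # The client uses the IP address as the NameNode knows it.
    return NAMENODE_VIEW[hostname]

print(datanode_target("dn1.example.com", use_datanode_hostname=False))  # 10.0.0.21
print(datanode_target("dn1.example.com", use_datanode_hostname=True))   # 192.168.100.21
```

In this scenario, a client that sits only on the front network cannot reach 10.0.0.21, so resolving the hostname locally is what makes the DataNode reachable.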

Hadoop’s security configurations also include properties to override the default behavior of DNS lookup by forcing a NIC or a given nameserver:

- hadoop.security.dns.interface=eth0 to lock DNS lookup to a set of NICs (separated by a comma)

- hadoop.security.dns.nameserver=192.168.101.1 to force the use of a given DNS