Policy enforcement with Open Policy Agent

Jan 22, 2020


Open Policy Agent is an open-source multi-purpose policy engine. Its main goal is to unify policy enforcement across the cloud native stack. The project was created by Styra and it is currently incubating at the Cloud Native Computing Foundation. It is used at Netflix, SAP and Cloudflare among others.

Let’s see how Open Policy Agent works and how we can leverage it to implement security policies in Kafka and in a custom application.

Architecture

Open Policy Agent (OPA) is expected to run next to your applications as a host-level daemon. Running OPA locally keeps decisions fast and highly available, because the application does not depend on a remote service to get an answer.

The decision making process with OPA is the following:

- The application receives a request

- The application translates this request to a JSON object

- The application sends the JSON object to OPA

- OPA evaluates the query object against the defined policies

- OPA sends the decision (allow or deny) back to the application as a JSON object

- The application fulfils the request or rejects it accordingly; this is where the policy is enforced
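The steps above can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the OPA address (`localhost:8181`), the policy path (`example/allow`) and the helper names `build_query`, `parse_decision` and `is_allowed` are all hypothetical and chosen for illustration. It relies on OPA's Data API, which accepts a JSON body with an `input` key and answers with a JSON body holding a `result` key.

```python
import json
import urllib.request

# Assumed address and policy path; adapt both to your own setup.
OPA_URL = "http://localhost:8181/v1/data/example/allow"


def build_query(user: str) -> bytes:
    """Translate an application request into the JSON document sent to OPA."""
    return json.dumps({"input": {"user": user}}).encode("utf-8")


def parse_decision(body: bytes) -> bool:
    """Read OPA's answer; a missing "result" (undefined decision) counts as deny."""
    return json.loads(body).get("result", False) is True


def is_allowed(user: str, url: str = OPA_URL) -> bool:
    """Send the query to OPA over HTTP and return the decision."""
    req = urllib.request.Request(
        url,
        data=build_query(user),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return parse_decision(resp.read())
```

The application would then call `is_allowed(...)` before serving the request and reject it when the function returns `False`.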

Most of the time, OPA runs as a daemon and is queried over its REST API. For applications written in Go, OPA can also be embedded as a library with the package github.com/open-policy-agent/opa/rego.

Policy decoupling

Open Policy Agent is built around the concept of policy decoupling: software should be able to enforce external, declarative and easily modifiable policies without recompiling or redeploying any of its components.

OPA is environment agnostic. It can be used for pretty much anything, as long as you implement the enforcement logic in your app and describe its inputs and outputs in the Rego policy language. If you are familiar with the Big Data ecosystem, think of Open Policy Agent as Apache Ranger for everything.

At the time of writing, there are a dozen OPA integrations available for various services: Kafka, Docker, SSH, sudo, etc. Some of these implementations are available here. The integration for Kubernetes is a bit special: it is published as a side project called Gatekeeper.

Rego policy language

Rego is a high-level declarative language used in OPA to express policies. It is built to be easy to read and write.

An OPA policy boils down to “user U can/cannot do operation O”.

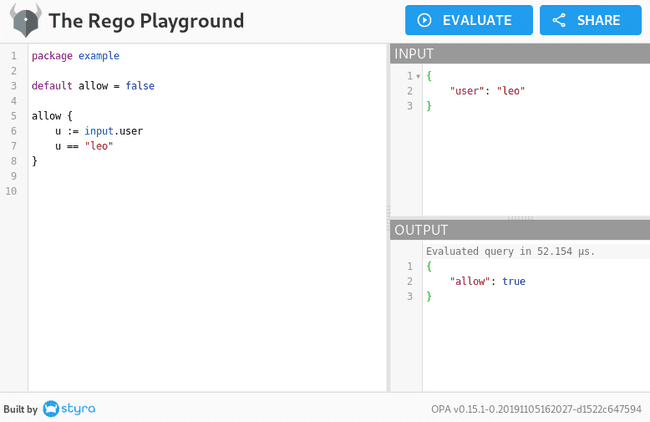

Here is an example of a very simple policy that denies every user except for leo:

package example

default allow = false

allow {
    u := input.user
    u == "leo"
}

Rego supports variables, nested documents, strings, numbers, expression logic, comparisons, etc. For a quick overview of Rego’s abilities, the cheat sheet is a good place to start.
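To make the semantics of the example policy concrete, here is a plain Python mirror of it. It is an illustrative equivalent only, not how OPA evaluates Rego, and the function name `allow` simply echoes the rule name.

```python
def allow(input_doc: dict) -> bool:
    """Python mirror of the Rego rule: true only when input.user is "leo"."""
    # `default allow = false`: anything that does not satisfy the rule body
    # is denied, including an input with no "user" field at all.
    return input_doc.get("user") == "leo"


print(allow({"user": "leo"}))    # True
print(allow({"user": "alice"}))  # False
print(allow({}))                 # False
```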

Styra built a nice tool called Rego Playground to easily test policies.

Here is our previous policy being tested through this tool:

We will encounter various policy definitions throughout this article.

Open Policy Agent with Apache Kafka

In this part, we will see how to enforce security policies in Apache Kafka with OPA. For the sake of simplicity, the demo is a single-node installation. The Kafka broker is also left unsecured, so there is no notion of identity.

First, let’s download and install Open Policy Agent (the URL below fetches the macOS build; on Linux, use opa_linux_amd64 instead):

mkdir /opt/opa && cd /opt/opa
curl -L -o opa https://openpolicyagent.org/downloads/latest/opa_darwin_amd64
chmod 755 ./opa

We will also create a policy that denies everything by default:

mkdir -p data/kafka/authz
cat <<EOF > data/kafka/authz/allow.rego
package kafka.authz

default allow = false
EOF

We can now start Open Policy Agent in server mode:

./opa run --server --watch data/

Now we will download and install Kafka (version 2.3.1 in this demo):

curl http://apache.crihan.fr/dist/kafka/2.3.1/kafka_2.12-2.3.1.tgz -o /tmp/kafka_2.12-2.3.1.tgz
tar -xvzf /tmp/kafka_2.12-2.3.1.tgz -C /opt

Next, we will clone Open Policy Agent’s contrib repository and build the Kafka authorizer against our version of Apache Kafka.

git clone https://github.com/open-policy-agent/contrib
cd contrib/kafka_authorizer
mvn install

By default, the authorizer is compiled for Kafka 1.0.0, so kafka_authorizer/pom.xml must be changed before building to make it compatible with our version of Kafka.

The following compiled JARs need to be placed in Kafka’s libs directory:

cp target/kafka-authorizer-opa-1.0.jar /opt/kafka_2.12-2.3.1/libs/
cp target/kafka-authorizer-opa-1.0-package/share/java/kafka-authorizer-opa/gson-2.8.2.jar /opt/kafka_2.12-2.3.1/libs/

We will keep the default Kafka broker configuration and only add the properties that make OPA the authorizer for Kafka, pointing it to the policy we just created.

cd /opt/kafka_2.12-2.3.1
cat <<EOF >> config/server.properties
###################### OPA Properties ######################
authorizer.class.name=com.lbg.kafka.opa.OpaAuthorizer
opa.authorizer.url=http://localhost:8181/v1/data/kafka/authz/allow
opa.authorizer.allow.on.error=false
opa.authorizer.cache.initial.capacity=100
opa.authorizer.cache.maximum.size=100
opa.authorizer.cache.expire.after.ms=600000
EOF

We can start Apache ZooKeeper and then the Kafka broker, each in its own terminal since both run in the foreground:

bin/zookeeper-server-start.sh config/zookeeper.properties
bin/kafka-server-start.sh config/server.properties

Let’s try to create a topic and see what happens:

bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic MyTopic
Error while executing topic command : org.apache.kafka.common.errors.TopicAuthorizationException: Not authorized to access topics: [Authorization failed.]
[2019-12-07 17:50:56,727] ERROR java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TopicAuthorizationException: Not authorized to access topics: [Authorization failed.]
    at org.apache.kafka.common.internals.KafkaFutureImpl.wrapAndThrow(KafkaFutureImpl.java:45)
    at org.apache.kafka.common.internals.KafkaFutureImpl.access$000(KafkaFutureImpl.java:32)
    at org.apache.kafka.common.internals.KafkaFutureImpl$SingleWaiter.await(KafkaFutureImpl.java:89)
    at org.apache.kafka.common.internals.KafkaFutureImpl.get(KafkaFutureImpl.java:260)
    at kafka.admin.TopicCommand$AdminClientTopicService.createTopic(TopicCommand.scala:190)
    at kafka.admin.TopicCommand$TopicService.createTopic(TopicCommand.scala:149)
    at kafka.admin.TopicCommand$TopicService.createTopic$(TopicCommand.scala:144)
    at kafka.admin.TopicCommand$AdminClientTopicService.createTopic(TopicCommand.scala:172)
    at kafka.admin.TopicCommand$.main(TopicCommand.scala:60)
    at kafka.admin.TopicCommand.main(TopicCommand.scala)
Caused by: org.apache.kafka.common.errors.TopicAuthorizationException: Not authorized to access topics: [Authorization failed.]
 (kafka.admin.TopicCommand$)

As enforced by our strict “deny all” policy, we are not able to create a topic. Let’s see what happens if we try again after modifying the policy to allow everything:

cat <<EOF > /opt/opa/data/kafka/authz/allow.rego
package kafka.authz

default allow = true
EOF
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic MyTopic

It worked! The topic was created because the policy enforced by OPA now allows it. This policy is a little too simple, though, so let’s write a more complex one that enforces the following rule:

Producing is only allowed on the topic test_project. Producers trying to write to any other topic must be blocked. Every other action on any topic is allowed.

package kafka.authz

default allow = false

allow {
    not deny
}

deny {
    is_produce_operation
    not is_topic_test_project
}

is_produce_operation {
    input.operation.name == "Write"
}

is_topic_test_project {
    input.resource.name == "test_project"
}

With this policy, every operation is allowed on every topic, except producing messages, which is only accepted on the test_project topic:

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic project
>This should be blocked
>[2019-12-09 13:59:10,486] ERROR Error when sending message to topic test with key: null, value: 22 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)
org.apache.kafka.common.errors.TopicAuthorizationException: Not authorized to access topics: [project]

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test_project
>This should be allowed
>SUCCESS!

Now you know how to use OPA to implement access control policies on Kafka resources!
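To double-check the logic of the produce rule outside the broker, the policy can be mirrored in Python. This is a sketch under assumptions: the input documents below only contain the two fields the policy reads (`operation.name` and `resource.name`), the `"Read"` operation name is used purely as an example of a non-write operation, and the function names `deny` and `allow` are illustrative.

```python
def deny(input_doc: dict) -> bool:
    """Mirror of the `deny` rule: a write to any topic other than test_project."""
    operation = input_doc.get("operation", {}).get("name")
    topic = input_doc.get("resource", {}).get("name")
    is_produce_operation = operation == "Write"
    is_topic_test_project = topic == "test_project"
    return is_produce_operation and not is_topic_test_project


def allow(input_doc: dict) -> bool:
    """Mirror of the `allow` rule: everything is allowed unless `deny` holds."""
    return not deny(input_doc)


write_project = {"operation": {"name": "Write"}, "resource": {"name": "project"}}
write_test_project = {"operation": {"name": "Write"}, "resource": {"name": "test_project"}}
read_project = {"operation": {"name": "Read"}, "resource": {"name": "project"}}

print(allow(write_project))       # False
print(allow(write_test_project))  # True
print(allow(read_project))        # True
```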

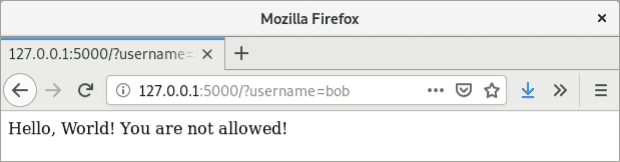

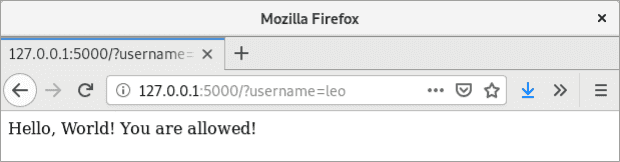

What about OPA with my application?

With Open Policy Agent’s REST API, it is particularly easy to integrate it with your custom applications. The goal is to have simple, portable, centralized access control to your application without reinventing the wheel.

For this demo I built a simple Flask WebApp:

from flask import Flask, request
import requests

app = Flask(__name__)

# OPA Data API endpoint for the `allow` rule of package myapp.auth
OPA_URL = 'http://master01.metal.ryba:8181/v1/data/myapp/auth/allow'

@app.route('/')
def hello_world():
    # The username comes from the query string; it is None when absent
    username = request.args.get('username')
    # Ask OPA for a decision, passing the username as the policy input
    r = requests.post(OPA_URL, json={"input": {"user": username}})
    # Treat a missing "result" (undefined decision) as a denial
    allowed = r.json().get('result', False)
    if allowed:
        return 'Hello, World! You are allowed!'
    else:
        return 'Hello, World! You are not allowed!'

As we can see in the code, the authorization decision is delegated to Open Policy Agent, which is running on host master01.metal.ryba on port 8181. This application can be started with:

export FLASK_APP=myapp.py
flask run

The policy used to make the decision is defined as:

mkdir -p data/myapp/auth
cat <<EOF > data/myapp/auth/allow.rego
package myapp.auth

default allow = false

allow {
    user := input.user
    user == "leo"
}
EOF

The enforced rule is quite simple: the response shows whether a user is allowed or not, depending on the provided username parameter. Let’s try it:

curl http://127.0.0.1:5000/
Hello, World! You are not allowed!

curl 'http://127.0.0.1:5000/?username=leo'
Hello, World! You are allowed!

Looks good.
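One case the demo app does not cover is OPA being unreachable or returning something unexpected. The sketch below shows one possible way to fail closed in that situation, in the same spirit as the `opa.authorizer.allow.on.error=false` setting used for Kafka. The helper names `post_json` and `is_allowed`, the two-second timeout and the injectable `post` parameter are assumptions made for illustration; the URL is the one used by the demo app.

```python
import json
import urllib.request
from typing import Callable

OPA_URL = "http://master01.metal.ryba:8181/v1/data/myapp/auth/allow"


def post_json(url: str, payload: dict) -> dict:
    """POST a JSON payload and return the decoded JSON answer."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return json.load(resp)


def is_allowed(username, post: Callable[[str, dict], dict] = post_json) -> bool:
    """Ask OPA for a decision and deny whenever no clear answer is obtained."""
    try:
        answer = post(OPA_URL, {"input": {"user": username}})
    except (OSError, ValueError):
        # OSError covers network failures and timeouts (URLError is a subclass);
        # ValueError covers a body that is not valid JSON.
        return False
    # Only an explicit `true` result grants access.
    return isinstance(answer, dict) and answer.get("result", False) is True
```

Passing the transport as a parameter keeps the decision logic easy to exercise without a running OPA server, for example with `is_allowed("leo", post=lambda url, payload: {"result": True})`.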

What’s next?

We have seen what Open Policy Agent is, how it works and how to integrate it with Apache Kafka or with a custom application. In a follow-up article, we will test the integration of OPA with Kubernetes.