Published articles

New TDP website launched

Categories: Big Data | Tags: Programming, Ansible, Hadoop, Python, TDP

The new TDP (Trunk Data Platform) website is online. We invite you to browse its pages to discover the platform, stay informed, and cultivate contact with the TDP community. TDP is a completely open…

By David WORMS

Oct 3, 2023

Data platform requirements and expectations

Categories: Big Data, Infrastructure | Tags: Data Engineering, Data Governance, Data Analytics, Data Hub, Data Lake, Data lakehouse, Data Science

A big data platform is a complex and sophisticated system that enables organizations to store, process, and analyze large volumes of data from a variety of sources. It is composed of several…

By David WORMS

Mar 23, 2023

Adaltas Summit 2022 Morzine

Categories: Big Data, Adaltas Summit 2022 | Tags: Data Engineering, Infrastructure, Iceberg, Container, Data lakehouse, Docker, Kubernetes

For its third edition, the whole Adaltas crew is gathering in Morzine for a week, with 2 days dedicated to technology on the 15th and 16th of September 2022. The speakers choose one of the…

By David WORMS

Jan 13, 2023

Traefik, Docker and dnsmasq to simplify container networking

Categories: Containers Orchestration, Infrastructure, Tech Radar | Tags: DNS, Gatsby, JAMstack, Linux, Docker, Network

Good tech adventures start with some frustration, a need, or a requirement. This is the story of how I simplified the management and access of my local web applications with the help of Traefik and…

By David WORMS

Nov 17, 2022

Nix package creation: install a not yet supported font

Categories: Hack | Tags: Learning and tutorial, Linux, Packaging, GitOps, NixOS, Open source

The Nix packages collection is large, with over 60,000 packages. However, chances are that the package you need is sometimes not available, and you must integrate it yourself. I needed some fonts which…

By David WORMS

Mar 29, 2022

JS monorepos in prod 6: CI/CD, continuous integration and deployment with Travis CI

Categories: DevOps & SRE, Front End | Tags: CI/CD, Monorepo, Node.js, Unit tests

Implementing continuous integration (CI) and continuous deployment (CD) on a monorepo is quite complex due to the diversity of multiple responsibilities between developers and the need to coordinate…

By David WORMS

Dec 6, 2021

Spring 2022 internship - building a Data Lab

Categories: Data Science, Learning | Tags: MongoDB, Spark, Argo CD, Elasticsearch, Internship, Keycloak, Kubernetes, OpenID Connect, PostgreSQL

Job Description Over the last few years, we developed the ability to use computers to process large amounts of data. The ecosystem evolved over a large offering of tools and libraries and the creation…

By David WORMS

Nov 24, 2021

CSV package for Node.js version 6

Categories: Node.js | Tags: Data Engineering, Refactoring, CSV, File Format, Release and features

Version 6 of the CSV package for Node.js is released along with its sub-projects. Here are the latest versions:…

By David WORMS

Nov 15, 2021

Internship in Data Engineering

Categories: Front End, Learning | Tags: Metrics, Monitoring, Hive, Kafka, Delta Lake, Elasticsearch, IaC, Internship, Kubernetes, Streaming

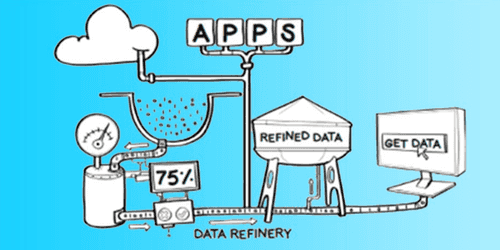

Job Description Data is a valuable business asset. Some call it the new oil. The data engineer collects, transforms and refines raw data into information that can be used by business analysts and data…

By David WORMS

Oct 25, 2021

Internship in Web Technologies

Categories: Front End, Learning | Tags: DevOps, LDAP, React.js, CI/CD, Docker, GraphQL, IaC, Internship, Kubernetes, Node.js, OAuth2

Job Description As part of its Big Data activities, Adaltas Academy is an information-sharing platform bringing together articles, training content, and a knowledge base. The users of the platform are…

By David WORMS

Oct 14, 2021

Adaltas Summit 2021, 2nd edition in Corsica

Categories: Adaltas Summit 2021, Learning | Tags: Ansible, Hadoop, Spark, Azure, Blockchain, Deep Learning, Docker, Terraform, Kubernetes, Node.js

For its second edition, the whole Adaltas crew is gathering in Corsica for a week, with 2 days dedicated to technology on the 23rd and 24th of September 2021. After a year and a half of sanitary…

By David WORMS

Sep 21, 2021

Running your Travis CI builds locally with Docker

Categories: DevOps & SRE, Front End | Tags: Bash, Tools, CI/CD, Node.js, Unit tests

Setting up the environment to run the tests on a CI/CD platform can take a few round trips between your host machine and the remote CI/CD. For every attempt, you’ll have to commit and publish your…

By David WORMS

Sep 6, 2021

Desacralizing the Linux overlay filesystem in Docker

Categories: Containers Orchestration, Infrastructure | Tags: DevOps, File system, Linux, Docker

Overlay filesystems (also called union filesystems) are a fundamental technology in Docker to create images and containers. They create a filesystem from the union of several directories. Multiple…

By David WORMS

Jun 3, 2021
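The layering idea behind union filesystems can be sketched as a toy in-memory model. This is an illustration under assumptions, not Docker's or the kernel's code: layers are plain Python dictionaries mapping paths to contents, and `UnionView` and `WHITEOUT` are hypothetical names. The real overlay driver works on actual directories (lower, upper and work directories) and records deletions as special whiteout files on disk.

```python
from typing import Dict, List, Optional

# Hypothetical sentinel marking a path as deleted in the writable layer.
WHITEOUT = object()


class UnionView:
    """Toy model of a union mount: read-only lower layers plus one writable upper layer."""

    def __init__(self, lowers: List[Dict[str, str]]):
        # lowers[0] is the topmost read-only layer, lowers[-1] the base layer.
        self.lowers = lowers
        self.upper: Dict[str, object] = {}

    def _stack(self) -> List[Dict]:
        # Layers ordered from top (writable) to bottom (base).
        return [self.upper, *self.lowers]

    def read(self, path: str) -> Optional[str]:
        # The first layer that knows the path wins; a whiteout hides lower copies.
        for layer in self._stack():
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, content: str) -> None:
        # Lower layers are never modified: new content lands in the upper layer.
        self.upper[path] = content

    def delete(self, path: str) -> None:
        # Deleting a file from a lower layer leaves a whiteout in the upper layer.
        self.upper[path] = WHITEOUT

    def listing(self) -> List[str]:
        # Merge from the bottom layer up so that upper entries override lower ones.
        merged: Dict[str, object] = {}
        for layer in reversed(self._stack()):
            merged.update(layer)
        return sorted(p for p, v in merged.items() if v is not WHITEOUT)
```

Reads fall through the layers from top to bottom, while writes and deletions only touch the upper layer, which is why the lower layers can be shared between several containers.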

Bridging the DBnomics Swagger/OpenAPI schema with GraphQL

Categories: DevOps & SRE, Front End | Tags: Data Engineering, JAMstack, GraphQL, JavaScript, Node.js, REST, Schema

While writing a long and tedious document today, I came across DBnomics, an open platform federating economic datasets. Browsing its website and APIs, I found their OpenAPI schema (aka Swagger…

By David WORMS

Apr 8, 2021

JS monorepos in prod 4: unit testing with Mocha and Should.js

Categories: DevOps & SRE, Front End | Tags: Automation, CI/CD, Git, GitOps, Monorepo, Node.js, Unit tests

Unit testing is essential for every long-term project and allows you to break down the functionalities of your code into isolated, testable units. Indeed, the main goal of a unit test is to verify if an…

By David WORMS

Feb 25, 2021

JS monorepos in prod 3: commit enforcement and changelog generation

Categories: DevOps & SRE, Front End | Tags: CI/CD, Git, JavaScript, Monorepo, Node.js, Release and features, Unit tests

Conventional Commits introduces a structured format for commit messages. It standardizes the messages among all the contributors. This makes them more readable and easier to automate. It simplifies the…

By David WORMS

Feb 2, 2021
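A Conventional Commits header follows the shape `type(scope)!: description`, where the scope and the `!` breaking-change marker are optional, and a `BREAKING CHANGE:` footer can also flag a breaking change. The minimal Python sketch below parses that structure. `parse_commit` and `HEADER_RE` are hypothetical names, the regular expression is a simplified assumption rather than the full grammar of the specification, and it is not the tooling used in the article series.

```python
import re
from typing import Optional, TypedDict

# Simplified header grammar: type, optional (scope), optional "!", then ": description".
HEADER_RE = re.compile(
    r"^(?P<type>[a-zA-Z]+)"            # e.g. feat, fix, docs
    r"(?:\((?P<scope>[^()\r\n]+)\))?"  # optional scope in parentheses
    r"(?P<bang>!)?"                    # optional breaking-change marker
    r": (?P<description>.+)$"          # colon, space, then the summary
)


class Commit(TypedDict):
    type: str
    scope: Optional[str]
    breaking: bool
    description: str


def parse_commit(message: str) -> Optional[Commit]:
    """Return the structured header of a commit message, or None if it does not conform."""
    lines = message.strip().splitlines()
    if not lines:
        return None
    match = HEADER_RE.match(lines[0])
    if match is None:
        return None
    # The specification treats "BREAKING-CHANGE" as a synonym of "BREAKING CHANGE".
    footer_breaking = any(
        line.startswith(("BREAKING CHANGE: ", "BREAKING-CHANGE: ")) for line in lines[1:]
    )
    return {
        "type": match["type"].lower(),
        "scope": match["scope"],
        "breaking": match["bang"] is not None or footer_breaking,
        "description": match["description"],
    }
```

Because the type and the breaking flag come out as structured data, a release script can map `fix` to a patch bump, `feat` to a minor bump and breaking changes to a major bump.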

JS monorepos in prod 2: project versioning and publishing

Categories: DevOps & SRE, Front End | Tags: CI/CD, Git, GitOps, JavaScript, Monorepo, Node.js, Release and features, Unit tests

One great advantage of a monorepo is the ability to maintain coherent versions between packages and to automate version creation and package publication. This article covers the versioning and…

By David WORMS

Jan 11, 2021

JS monorepos in prod 1: project initialization

Categories: DevOps & SRE, Front End | Tags: Git, GitOps, JavaScript, Monorepo, Node.js, Release and features

Every project journey begins with the step of initialization. When your overall project is composed of multiple projects, it is tempting to create one Git repository per project. In Node.js, a project…

By David WORMS

Jan 5, 2021

OAuth2 and OpenID Connect for microservices and public applications (Part 2)

Categories: Containers Orchestration, Cyber Security | Tags: CNCF, LDAP, Micro Services, JavaScript Object Notation (JSON), OAuth2, OpenID Connect

When using OAuth2 and OpenID Connect, it is important to understand how the authorization flow takes place, who should call the Authorization Server, and how to store the tokens. Moreover, microservices and…

By David WORMS

Nov 20, 2020

OAuth2 and OpenID Connect, a gentle and working introduction (Part 1)

Categories: Containers Orchestration, Cyber Security | Tags: CNCF, Go Lang, JAMstack, LDAP, Kubernetes, OAuth2, OpenID Connect

Understanding OAuth2, OpenID and OpenID Connect (OIDC), how they relate, how the communications are established, and how to architect your application with the given access, refresh and id tokens…

By David WORMS

Nov 17, 2020

Plugin architecture in JavaScript and Node.js with Plug and Play

Categories: Front End, Node.js | Tags: Asynchronous, DevOps, Programming, Agile, JavaScript, Open source, Release and features

Plug and Play helps library and application authors to introduce a plugin architecture into their code. It simplifies complex code execution with well-defined interception points, also called hooks…

By David WORMS

Aug 28, 2020

Cloudera CDP and Cloud migration of your Data Warehouse

Categories: Big Data, Cloud Computing | Tags: Azure, Cloudera, Data Hub, Data Lake, Data Warehouse

While one of our customers is anticipating a move to the Cloud, and with the recent announcement of Cloudera CDP availability in mid-September during the Strata conference, it seems like the appropriate…

By David WORMS

Dec 16, 2019

Internship Data Science & Data Engineer - ML in production and streaming data ingestion

Categories: Data Engineering, Data Science | Tags: Flink, DevOps, Hadoop, HBase, Kafka, Spark, Internship, Kubernetes, Python

Context The exponential evolution of data has turned the industry upside down by redefining data storage, processing and data ingestion pipelines. Mastering these methods considerably facilitates…

By David WORMS

Nov 26, 2019

InfraOps & DevOps Internship - build a Big Data & Kubernetes PaaS

Categories: Big Data, Containers Orchestration | Tags: DevOps, LXD, Hadoop, Kafka, Spark, Ceph, Internship, Kubernetes, NoSQL

Context The acquisition of a high-capacity cluster is in line with Adaltas’ desire to build a PaaS-type offering to use and to provide Big Data and container orchestration platforms. The platforms are…

By David WORMS

Nov 26, 2019

Kerberos and Spnego authentication on Windows with Firefox

Categories: Cyber Security | Tags: Firefox, HTTP, Kerberos, FreeIPA

In Greek mythology, Kerberos, also called Cerberus, guards the gates of the Underworld to prevent the dead from leaving. He is commonly described as a three-headed dog with a serpent’s tail, a mane of…

By David WORMS

Nov 4, 2019

Notes on the Cloudera Open Source licensing model

Categories: Big Data | Tags: CDSW, License, Cloudera Manager, Open source

Following the publication of its Open Source licensing strategy on July 10, 2019 in an article called “our Commitment to Open Source Software”, Cloudera broadcast a webinar yesterday, October 2…

By David WORMS

Oct 25, 2019

Innovation, project vs product culture in Data Science

Categories: Data Science, Data Governance | Tags: DevOps, Agile, Scrum

Data Science carries the jobs of tomorrow. It is closely linked to the understanding of the business use cases, the behaviors and the insights that will be extracted from existing data. The stakes are…

By David WORMS

Oct 8, 2019

Gatsby.js, React and GraphQL for documentation websites

Categories: Adaltas Summit 2018, Front End | Tags: Gatsby, HTTP, JAMstack, React.js, SEO, API, GitOps, GraphQL, JavaScript, Markdown, Node.js

In the last few months, I have started to redesign some of our Open Source project websites. This includes the websites of the Node.js CSV project, the Node.js HBase client and the Nikita project, our…

By David WORMS

Apr 1, 2019

Main advantages of GraphQL as an alternative to REST

Categories: Front End | Tags: gRPC, API, GraphQL, JavaScript Object Notation (JSON), Node.js, Registry, REST

GraphQL is based on a simple idea: moving the assembly of a request from the server to the client. The client sees the overall strongly-typed schema instead of multiple REST endpoints and builds…

By David WORMS

Nov 27, 2018

Node.js CSV version 4 - re-writing and performance

Categories: Node.js | Tags: CLI, Data Engineering, Refactoring, CSV, Release and features

Today, we release a new major version of the Node.js CSV parser project. Version 4 is a complete rewrite of the project focusing on performance. It also comes with new functionalities as well as…

By David WORMS

Nov 19, 2018

Managing User Identities on Big Data Clusters

Categories: Cyber Security, Data Governance | Tags: Kerberos, LDAP, Active Directory, Ansible, FreeIPA, IAM

Securing a Big Data cluster involves integrating or deploying specific services to store users. Some users are cluster-specific while others are available across all clusters. It is not always easy to…

By David WORMS

Nov 8, 2018

One week to discuss technology in a Moroccan riad

Categories: Adaltas Summit 2018, Learning | Tags: Flink, CDSW, Gatsby, React.js, Hadoop, Knox, Data Science, Deep Learning, Kubernetes, Node.js

This year, Adaltas is organizing its first conference, from the 22nd to the 26th of October. On the agenda of these 5 days of conference: discussing technology in one of the most beautiful riads of Marrakech. Mix the…

By David WORMS

Oct 11, 2018

Deploying a secured Flink cluster on Kubernetes

Categories: Big Data | Tags: Flink, Encryption, Kerberos, HDFS, Kafka, Elasticsearch, SSL/TLS

When deploying secured Flink applications inside Kubernetes, you are faced with two choices. Assuming your Kubernetes is secure, you may rely on the underlying platform or rely on Flink native…

By David WORMS

Oct 8, 2018

Data Lake ingestion best practices

Categories: Big Data, Data Engineering | Tags: NiFi, Data Governance, HDF, Operation, Avro, Hive, ORC, Spark, Data Lake, File Format, Protocol Buffers, Registry, Schema

Creating a Data Lake requires rigor and experience. Here are some good practices around data ingestion both for batch and stream architectures that we recommend and implement with our customers…

By David WORMS

Jun 18, 2018

Essential questions about Time Series

Categories: Big Data | Tags: Grafana, Druid, HBase, Hive, ORC, Data Science, Elasticsearch, IOT

Today, the bulk of Big Data is temporal. We see it in the media and among our customers: smart meters, banking transactions, smart factories, connected vehicles … IoT and Big Data go hand in hand. We…

By David WORMS

Mar 18, 2018

Publishing guidelines

Categories: DevOps & SRE | Tags: Arch Linux, KVM, VM, Vagrant, Markdown

This is as much a set of guidelines targeting everyone publishing content on the web as rules for reviewers to ensure no validation is forgotten before submitting for publication. It mostly targets…

By David WORMS

Feb 28, 2018

Notes after Katacoda Training on Kubernetes Container Orchestration

Categories: Containers Orchestration, Learning | Tags: Helm, Ingress, Kubeadm, CNI, Micro Services, Minikube, Kubernetes

A few weeks ago, I dedicated two days to following the tutorials available on Katacoda, the interactive learning platform for Kubernetes or any other container orchestration platform. I’m sharing my…

By David WORMS

Dec 14, 2017

Micro Services

Categories: Cloud Computing, Containers Orchestration, Open Source Summit Europe 2017 | Tags: Mesos, CNCF, DNS, Encryption, gRPC, Istio, Linkerd, Micro Services, MITM, Service Mesh, Kubernetes, Proxy, SPOF, SSL/TLS

Back in the day, applications were monolithic and we could use an IP address to access a service. With virtual machines (VM), multiple hosts started to appear on the same machine with multiple apps…

By David WORMS

Nov 14, 2017

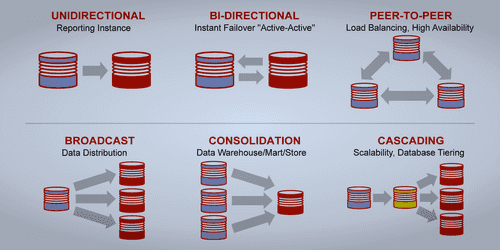

MariaDB integration with Hadoop

Categories: Infrastructure | Tags: Database, HA, MariaDB, Hadoop, Hive

During a workshop with one of our customers, Adaltas identified a potential risk in using MariaDB’s High Availability (HA) strategy. Since the customer selected Cloudera’s CDH 5 distribution, the…

By David WORMS

Jul 31, 2017

Oracle DB synchronization to Hadoop with CDC

Categories: Data Engineering | Tags: CDC, GoldenGate, Oracle, Hive, Sqoop, Data Warehouse

This note is the result of a discussion about the synchronization of data written in a database to a warehouse stored in Hadoop. Thanks to Claude Daub from GFI who wrote it and who authorizes us to…

By David WORMS

Jul 13, 2017

Hive Metastore HA with DBTokenStore: Failed to initialize master key

Categories: Big Data, DevOps & SRE | Tags: Infrastructure, Hive, Bug

This article describes my little adventure around a startup error with the Hive Metastore. It should be reproducible with any secure installation, meaning with Kerberos, with high availability enabled…

By David WORMS

Jul 21, 2016

EclairJS - Putting a Spark in Web Apps

Categories: Data Engineering, Front End | Tags: Jupyter, Spark, JavaScript

Presentation by David Fallside from IBM, images extracted from the presentation. Introduction Web Apps development has moved from Java to Node.js and JavaScript. It provides a simple and rich…

By David WORMS

Jul 17, 2016

Hive, Calcite and Druid

Categories: Big Data | Tags: Business intelligence, Database, Druid, Hadoop, Hive

BI/OLAP requires interactive visualization of complex data streams: real-time bidding events, user activity streams, voice call logs, network traffic flows, firewall events, application KPIs. Traditional…

By David WORMS

Jul 14, 2016

Red Hat Storage Gluster and its integration with Hadoop

Categories: Big Data | Tags: GlusterFS, Red Hat, Hadoop, HDFS, Storage

I had the opportunity to be introduced to Red Hat Storage and Gluster in a joint presentation by Red Hat France and the company StartX. I have compiled my notes here, at least partially. I will…

By David WORMS

Jul 3, 2015

A simple connect middleware to transpile CoffeeScript files

Categories: Hack, Node.js | Tags: Tools, CoffeeScript, Node.js

This new module called connect-coffee-script is a Connect middleware used to serve JavaScript files written in CoffeeScript. This middleware is to be used by Connect or any Connect-compatible…

By David WORMS

Jul 4, 2014

Tutorial for creating and publishing a new Node.js module

Categories: Front End | Tags: Learning and tutorial, License, Mocha, NPM, Travis CI, CoffeeScript, GitHub, JavaScript, Node.js, Unit tests

In this tutorial, I provide complete instructions for creating a new Node.js module, writing the code in coffee-script, publishing it on GitHub, sharing it with other Node.js fellows through NPM…

By David WORMS

Dec 3, 2013

Crawl your website including a login form with PhantomJS

Categories: Front End | Tags: Mocha, CoffeeScript, JavaScript, Node.js, Unit tests

With PhantomJS, we start a headless WebKit and pilot it with our own scripts. Said differently, we write a script in JavaScript or CoffeeScript which controls an Internet browser and manipulates the…

By David WORMS

Nov 27, 2013

Catch 'uncaughtException' error in your mocha test

Categories: Node.js | Tags: DevOps, Mocha, JavaScript, Unit tests

This isn’t the first time I faced this situation. Today, I finally found the time and energy to look for a solution. In your mocha test, let’s say you need to test an expected “uncaughtException…

By David WORMS

Oct 27, 2013

Remote connection with SSH

Categories: Cyber Security | Tags: Automation, HTTP, SSH

While I was teaching Big Data and Hadoop, a student asked me about SSH and how to use it. I’ll discuss the protocol and the tools to benefit from it. Lately, I have been automating the deployment of Hadoop clusters…

By David WORMS

Oct 2, 2013

Components for CDH and HDP

Categories: Big Data | Tags: Flume, Hortonworks, Hadoop, Hive, Oozie, Sqoop, Zookeeper, Cloudera, CDH, HDP

I was interested in comparing the different components distributed by Cloudera and Hortonworks. This also gives us an idea of the versions packaged by the two distributions. At the time of this writing…

By David WORMS

Sep 22, 2013

Splitting HDFS files into multiple hive tables

Categories: Data Engineering | Tags: Flume, Pig, HDFS, Hive, Oozie, SQL

I am going to show how to split a CSV file stored inside HDFS as multiple Hive tables based on the content of each record. The context is simple. We are using Flume to collect logs from all over our…

By David WORMS

Sep 15, 2013
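The routing logic behind splitting one file into several tables, one per record category, can be sketched locally in Python. This is only an illustration under assumptions: the input is a small in-memory CSV string rather than a file in HDFS, the category is read from a single column whose index is passed as `key_column`, the function name `split_records` and the sample log lines are hypothetical, and the article itself relies on Hadoop tools rather than this code.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List


def split_records(text: str, key_column: int) -> Dict[str, List[List[str]]]:
    """Route each CSV record to a bucket named after the value of `key_column`.

    Each bucket stands for one target table.
    """
    buckets: Dict[str, List[List[str]]] = defaultdict(list)
    for record in csv.reader(io.StringIO(text)):
        if not record:  # csv.reader yields [] for blank lines
            continue
        # The routing decision depends only on the content of the record itself.
        buckets[record[key_column]].append(record)
    return dict(buckets)


if __name__ == "__main__":
    # Hypothetical log lines: date, emitting service, message.
    sample = (
        "2013-09-01,apache,GET /\n"
        "2013-09-01,sshd,login ok\n"
        "2013-09-02,apache,GET /about\n"
    )
    for table, rows in split_records(sample, key_column=1).items():
        print(table, len(rows))
```

Keeping the grouping logic separate from where the buckets are written makes it easy to swap the in-memory lists for one output location per table.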

About the new BSD license and its difference with other BSD licenses

Categories: Data Governance | Tags: License, Open source

As a non-restrictive Open Source license, the “new BSD license” is commonly used across the Node.js community. However, it is only one of the BSD licenses available, along with the original “BSD…

By David WORMS

Aug 8, 2013

Kerberos and delegation tokens security with WebHDFS

Categories: Cyber Security | Tags: HTTP, Kerberos, HDFS, Big Data

WebHDFS is an HTTP REST server bundled with the latest version of Hadoop. What interests me in this article is digging into security with the Kerberos and delegation token functionalities. I will cover…

By David WORMS

Jul 25, 2013

Testing the Oracle SQL Connector for Hadoop HDFS

Categories: Data Engineering | Tags: Database, File system, Oracle, HDFS, CDH, SQL

Using Oracle SQL Connector for HDFS, you can use Oracle Database to access and analyze data residing in HDFS files or a Hive table. You can also query and join data in HDFS or a Hive table with other…

By David WORMS

Jul 15, 2013

Maven 3 behind a proxy

Categories: Hack | Tags: Maven, Java, Proxy

Maven 3 isn’t so different from its previous version 2. You will migrate most of your projects quite easily between the two versions. That wasn’t the case a few years ago between versions 1 and…

By David WORMS

Jul 11, 2013

Node CSV version 0.2.7

Categories: Hack | Tags: Pipeline, CoffeeScript, CSV, Node.js

While releasing version 0.2.7 of the CSV parser for Node.js, I’m stopping here to drop a few lines about what made it into this release. We are now using the latest CoffeeScript, which is version 1.4.…

By David WORMS

Jul 9, 2013

State of the Hadoop open-source ecosystem in early 2013

Categories: Big Data | Tags: Flume, Mesos, Phoenix, Pig, Hadoop, Kafka, Mahout, Data Science

Hadoop is already a large ecosystem and my guess is that 2013 will be the year where it grows even larger. There are some pieces that we no longer need to present. ZooKeeper, HBase, Hive, Pig, Flume…

By David WORMS

Jul 8, 2013

Oracle and Hive, how data are published?

Categories: Big Data | Tags: Oracle, Hive, Sqoop, Data Lake

In the past few days, I’ve published 3 related articles: a first one covering the option to integrate Oracle and Hadoop, a second one explaining how to install and use the Oracle SQL Connector with…

By David WORMS

Jul 6, 2013

Oracle to Apache Hive with the Oracle SQL Connector

Categories: Business Intelligence | Tags: Oracle, HDFS, Hive, Network

In a previous article published last week, I introduced the choices available to connect Oracle and Hadoop. In a follow up article, I covered the Oracle SQL Connector, its installation and integration…

By David WORMS

May 27, 2013

Options to connect and integrate Hadoop with Oracle

Categories: Data Engineering | Tags: Database, Java, Oracle, R, RDBMS, Avro, HDFS, Hive, MapReduce, Sqoop, NoSQL, SQL

I will list the different tools and libraries available to us developers in order to integrate Oracle and Hadoop. The Oracle SQL Connector for HDFS described below is covered in a follow up article…

By David WORMS

May 15, 2013

The state of Hadoop distributions

Categories: Big Data | Tags: Hortonworks, Intel, Oracle, Hadoop, Cloudera

Apache Hadoop is of course made available for download on its official webpage. However, downloading and installing the several components that make a Hadoop cluster is not an easy task and is a…

By David WORMS

May 11, 2013

Apache Hive Essentials How-to by Darren Lee

Categories: Business Intelligence, Learning | Tags: UDF, Hadoop, Hive, File Format, SQL

Recently, I’ve been asked to review a new book on Apache Hive called “Apache Hive Essentials How-to” (edit: the second edition is now available) written by Darren Lee and published by Packt Publishing…

By David WORMS

Apr 23, 2013

Hadoop development cluster of virtual machines with static IP using VirtualBox

Categories: Infrastructure | Tags: Ambari, Hortonworks, Red Hat, VirtualBox, VM, VMware, Cloudera, Network

A few days ago, I explained how to set up a cluster of virtual machines with static IPs and Internet access suitable to host your Hadoop cluster locally for development. At the time I made use of VMware…

By David WORMS

Mar 14, 2013

Definitions of machine learning algorithms present in Apache Mahout

Categories: Data Science | Tags: Algorithm, Classification, Hadoop, Mahout, Clustering, Machine Learning

Apache Mahout is a machine learning library built for scalability. Its core algorithms for clustering, classification and batch based collaborative filtering are implemented on top of Apache Hadoop…

By David WORMS

Mar 8, 2013

Virtual machines with static IP for your Hadoop development cluster

Categories: Infrastructure | Tags: Ambari, Hortonworks, Red Hat, VirtualBox, VM, VMware, Cloudera, Network

While I am about to install and test Ambari, this article is the occasion to illustrate how I set up my development environment with multiple virtual machines. Ambari, the deployment and monitoring…

By David WORMS

Feb 27, 2013

Merging multiple files in Hadoop

Categories: Hack | Tags: File system, Hadoop, HDFS

This is a command I used to concatenate the files stored in Hadoop HDFS matching a globbing expression into a single file. It uses the “getmerge” utility but, contrary to “getmerge”, the final…

By David WORMS

Jan 12, 2013
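For comparison, the effect of concatenating every file matching a glob into a single file can be sketched on a local filesystem with Python. The sketch assumes local paths instead of HDFS, sorts the matching files by name to obtain a deterministic order, and uses the hypothetical function name `merge_files`. It does not reproduce the exact behavior of `getmerge` or of the command described in the article.

```python
import tempfile
from pathlib import Path
from typing import List


def merge_files(directory: Path, pattern: str, destination: Path) -> List[Path]:
    """Concatenate all files in `directory` matching the glob `pattern` into `destination`.

    Returns the merged source files, in the order they were written.
    """
    target = destination.resolve()
    # Sort by name so part-00000, part-00001, ... are appended in order,
    # and never treat the destination file as one of its own inputs.
    sources = sorted(
        p for p in directory.glob(pattern) if p.is_file() and p.resolve() != target
    )
    with destination.open("wb") as out:
        for source in sources:
            out.write(source.read_bytes())  # raw bytes: no encoding or newline changes
    return sources


if __name__ == "__main__":
    # Demo on throwaway files shaped like MapReduce output (hypothetical names).
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "part-00000").write_text("a,1\n")
        (root / "part-00001").write_text("b,2\n")
        (root / "_SUCCESS").write_text("")
        merged = merge_files(root, "part-*", root / "merged.csv")
        print([p.name for p in merged])
        print((root / "merged.csv").read_text(), end="")
```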

E-commerce electronic cigarettes: first impressions with Prestashop

Categories: Tech Radar | Tags: HTML, Java, Node.js

Last year, I had to select and integrate an e-commerce software for the website CigarHit selling electronic cigarettes. Considering that the last e-commerce integration I made dated from 2005, I took…

By David WORMS

Jul 25, 2012

Node CSV version 0.2.1

Categories: Node.js | Tags: CoffeeScript, CSV, Release and features, Streaming

After the announcement of version 0.2.0 of the Node.js CSV parser at the beginning of October, we are releasing today a new version 0.2.1. This is mostly a bug fix release with enhanced…

By David WORMS

Jul 24, 2012

Node CSV version 0.1 and future developments

Categories: Node.js | Tags: CoffeeScript, CSV, Markdown, Release and features, Streaming

The Node CSV parser has just reached version 0.1, which closes the 0.0.x releases. Started almost 2 years ago, the project has received a tremendous amount of participation in the form of bug reports…

By David WORMS

Jul 21, 2012

Convert .flac music files to .mp3 on OS X

Categories: Hack | Tags: OS X, File Format

As an OS X user for years now, I should know by now that iTunes doesn’t support the FLAC format. We are now in 2012, and I’ve been waiting for this to happen for years. Losing patience, dark…

By David WORMS

Jul 20, 2012

Hadoop and R with RHadoop

Categories: Business Intelligence, Data Science | Tags: Thrift, Learning and tutorial, R, Hadoop, HBase, HDFS, MapReduce, Data Analytics

RHadoop is a bridge between R, a language and environment to statistically explore data sets, and Hadoop, a framework that allows for the distributed processing of large data sets across clusters of…

By David WORMS

Jul 19, 2012

Asynchronous array iteration in Node.js with Each

Categories: Node.js | Tags: Asynchronous, CoffeeScript, JavaScript, Release and features

Control flow in Node.js is the sort of library for which almost every developer has created and published their own. These libraries usually aim at reducing spaghetti code made of deeply nested callbacks. I…

By David WORMS

Jul 18, 2012

Installing and using MADlib with PostgreSQL on OSX

Categories: Data Science | Tags: Database, Greenplum, Statistics, PostgreSQL, SQL

We cover basic installation and usage of PostgreSQL and MADlib on OSX and Ubuntu. Instructions for other environments should be similar. PostgreSQL is an Open Source database with enterprise…

By David WORMS

Jul 7, 2012

Node CSV version 0.2 with streaming API

Categories: Node.js | Tags: Data Engineering, CSV, Markdown, Node.js, Streaming

The Node CSV parser in its version 0.2 has just been released. This version is a major enhancement as it aligns the parser with Node.js best practices regarding streams. The CSV parser behaves…

By David WORMS

Jul 2, 2012

HDFS and Hive storage - comparing file formats and compression methods

Categories: Big Data | Tags: Business intelligence, Hive, ORC, Parquet, File Format

A few days ago, we conducted a test in order to compare various Hive file formats and compression methods. Among those file formats, some are native to HDFS and apply to all Hadoop users. The…

By David WORMS

Mar 13, 2012

Two Hive UDAF to convert an aggregation to a map

Categories: Data Engineering | Tags: Java, HBase, Hive, File Format

I am publishing two new Hive UDAF to help with maps in Apache Hive. The source code is available on GitHub in two Java classes: “UDAFToMap” and “UDAFToOrderedMap” or you can download the jar file. The…

By David WORMS

Mar 6, 2012

Java versus JS fun, a quote from the Node.js mailing list

Categories: Node.js | Tags: Java, JavaScript, Node.js

I just read that one on the mailing list. I found it relevant enough to share it with those who did not subscribe to it: First Lothar Pfeiler: I still wonder, if it’s cool to have such a big…

By David WORMS

Feb 23, 2012

A fresh look at testing Node.js projects: Mocha, Should and Travis

Categories: DevOps & SRE, Node.js | Tags: DevOps, Mocha, CI/CD, JavaScript, Node.js, Unit tests

Today, I finally decided to spend some time on Travis. For a few weeks now, that little green image on top of many GitHub homepages has been bugging me. Well, to be totally honest, this isn…

By David WORMS

Feb 19, 2012

Coffee script, how do I debug that damn js line?

Categories: Hack, Node.js | Tags: Debug, CoffeeScript, JavaScript, Node.js

Update April 12th, 2012: pull request adding error reporting to CoffeeScript with line mapping. Chances are that, if you code in CoffeeScript, you often find yourself facing a JavaScript exception…

By David WORMS

Feb 15, 2012

Announcing Mecano, a set of functions for system deployment

Categories: DevOps & SRE, Node.js | Tags: Automation, Infrastructure, CoffeeScript, JavaScript, Open source

Update July 2016: Mecano is now renamed Nikita. We are releasing Node Mecano on GitHub, which gathers common functions used while deploying systems. The idea was to group those functions into a…

By David WORMS

Feb 12, 2012

OS module on steroids with the SIGAR Node binding

Categories: Node.js | Tags: C++, CPU, File system, Metrics, Monitoring, Network

Today we are announcing the first release of the Node binding to the SIGAR library. Visit the project website or the source code repository on GitHub. SIGAR is a cross platform interface for gathering…

By David WORMS

Jan 11, 2012

Timeseries storage in Hadoop and Hive

Categories: Data Engineering | Tags: CRM, timeseries, Tuning, Hadoop, HDFS, Hive, File Format

In the next few weeks, we will be exploring the storage and analytics of a large generated dataset. This dataset is composed of CRM tables associated with one time series table of about 7,000 billiard rows…

By David WORMS

Jan 10, 2012

How Node CSV parser may save your weekend

Categories: Hack | Tags: Bash, Hack, CSV, Node.js

Last Friday, an hour before my customer’s offices closed for the weekend, a co-worker came to me. He had just finished exporting 9 CSV files from an Oracle database which he wanted to import into…

By David WORMS

Dec 13, 2011

Node.js is now integrated to the Microsoft Azure platform

Categories: Cloud Computing, Tech Radar | Tags: Linux, Azure, Cloud, Node.js

Node is now a first class citizen in the Microsoft Azure cloud environment alongside .Net, Java and PHP. This integration is the logical consequence of Microsoft’s involvement in the development of…

By David WORMS

Dec 11, 2011

Hadoop and HBase installation on OSX in pseudo-distributed mode

Categories: Big Data, Learning | Tags: Hue, Infrastructure, Hadoop, HBase, Big Data, Deployment

The operating system chosen is OS X, but the procedure is not so different for any Unix environment because most of the software is downloaded from the Internet, uncompressed and set up manually. Only a…

By David WORMS

Dec 1, 2010

Storage and massive processing with Hadoop

Categories: Big Data | Tags: Hadoop, HDFS, Storage

Apache Hadoop is a system for building shared storage and processing infrastructures for large volumes of data (multiple terabytes or petabytes). Hadoop clusters are used by a wide range of projects…

By David WORMS

Nov 26, 2010

Node HBase, a Node.js client for Apache HBase

Categories: Big Data, Node.js | Tags: HBase, Big Data, Node.js, REST

HBase is a “column family” database from the Hadoop ecosystem built on the model of Google BigTable. HBase can accommodate very large volumes of data (tera or peta) while maintaining high…

By David WORMS

Nov 1, 2010

MapReduce introduction

Categories: Big Data | Tags: Java, MapReduce, Big Data, JavaScript

Information systems have more and more data to store and process. Companies like Google, Facebook, Twitter and many others store astronomical amounts of information from their customers and must be…

By David WORMS

Jun 26, 2010

Node.js, JavaScript on the server side

Categories: Front End, Node.js | Tags: HTTP, Server, JavaScript, Node.js

While waiting for the Next Big Language (NBL), I have been predicting to my customers, for 3 years or more now, a bright future for JavaScript as a programming language for server…

By David WORMS

Jun 12, 2010