Big Data

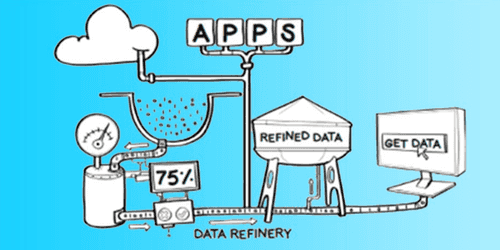

Data and the insights they contain are essential for companies to innovate and differentiate themselves. Data arrives from multiple sources, from inside the firewall to the edge, and its growth in volume, variety, and velocity calls for novel approaches. Today, organizations can accumulate huge amounts of information in a Data Lake for future analysis. For those without the necessary infrastructure, this Data Lake can easily be implemented in the Cloud.

With Big Data, Business Intelligence is entering a new era. Hadoop and its alternatives, NoSQL databases, and Cloud providers host and represent structured and unstructured data as well as time series such as your logs and sensor data. From collection to visualization, the entire processing chain runs both in batch and in real time.

Infrastructure

Cloud, on-premises, and hybrid environments:

- Integration with the information system

- Supervised deployments

- End-to-end security

- Management of multi-tenant environments

- Maintenance in operational condition, disaster recovery plan (DRP)

- Level 3 support

Data management

Data governance and provisioning:

- Big Data and Data Lake architecture

- Modeling and application architecture

- Environments with strong volume and latency constraints

- Batch and streaming collection and ingestion

- Refinement and enrichment

- Data quality control

Extracting value from data

Supporting business teams and serving project needs:

- Data reporting and visualization

- Optimization of data flows and distributed processing

- Ad hoc querying and data mining

- Building Machine Learning models and algorithms

- DevOps, SRE, and MLOps

Articles related to Big Data

Introduction to OpenLineage

Categories: Big Data, Data Governance, Infrastructure | Tags: Data Engineering, Infrastructure, Atlas, Data Lake, Data lakehouse, Data Warehouse, Data lineage

OpenLineage is an open-source specification for data lineage. The specification is complemented by Marquez, its reference implementation. Since its launch in late 2020, OpenLineage has been a presence…

Feb 19, 2024

Installation Guide to TDP, the 100% open source big data platform

Categories: Big Data, Infrastructure | Tags: Infrastructure, VirtualBox, Hadoop, Vagrant, TDP

The Trunk Data Platform (TDP) is a 100% open source big data distribution, based on Apache Hadoop and compatible with HDP 3.1. Initiated in 2021 by EDF, the DGFiP and Adaltas, the project is governed…

By Paul FARAULT

Oct 18, 2023

New TDP website launched

Categories: Big Data | Tags: Programming, Ansible, Hadoop, Python, TDP

The new TDP (Trunk Data Platform) website is online. We invite you to browse its pages to discover the platform, stay informed, and cultivate contact with the TDP community. TDP is a completely open…

By David WORMS

Oct 3, 2023

CDP part 6: end-to-end data lakehouse ingestion pipeline with CDP

Categories: Big Data, Data Engineering, Learning | Tags: Business intelligence, Data Engineering, Iceberg, NiFi, Spark, Big Data, Cloudera, CDP, Data Analytics, Data Lake, Data Warehouse

In this hands-on lab session we demonstrate how to build an end-to-end big data solution with Cloudera Data Platform (CDP) Public Cloud, using the infrastructure we have deployed and configured over…

By Tobias CHAVARRIA

Jul 24, 2023

CDP part 5: user permissions management on CDP Public Cloud

Categories: Big Data, Cloud Computing, Data Governance | Tags: Ranger, Cloudera, CDP, Data Warehouse

When you create a user or a group in CDP, it requires permissions to access resources and use the Data Services. This article is the fifth in a series of six: CDP part 1: introduction to end-to-end…

By Tobias CHAVARRIA

Jul 18, 2023

CDP part 4: user management on CDP Public Cloud with Keycloak

Categories: Big Data, Cloud Computing, Data Governance | Tags: EC2, Big Data, CDP, Docker Compose, Keycloak, SSO

Previous articles of the series cover the deployment of a CDP Public Cloud environment. All the components are ready for use and it is time to make the environment available to other users to explore…

By Tobias CHAVARRIA

Jul 4, 2023

CDP part 3: Data Services activation on CDP Public Cloud environment

Categories: Big Data, Cloud Computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloudera, CDP

One of the big selling points of Cloudera Data Platform (CDP) is their mature managed service offering. These are easy to deploy on-premises, in the public cloud or as part of a hybrid solution. The…

By Albert KONRAD

Jun 27, 2023

CDP part 2: CDP Public Cloud deployment on AWS

Categories: Big Data, Cloud Computing, Infrastructure | Tags: Infrastructure, AWS, Big Data, Cloud, Cloudera, CDP, Cloudera Manager

The Cloudera Data Platform (CDP) Public Cloud provides the foundation upon which full featured data lakes are created. In a previous article, we introduced the CDP platform. This article is the second…

By Albert KONRAD

Jun 19, 2023

Data platform requirements and expectations

Categories: Big Data, Infrastructure | Tags: Data Engineering, Data Governance, Data Analytics, Data Hub, Data Lake, Data lakehouse, Data Science

A big data platform is a complex and sophisticated system that enables organizations to store, process, and analyze large volumes of data from a variety of sources. It is composed of several…

By David WORMS

Mar 23, 2023

Operating Kafka in Kubernetes with Strimzi

Categories: Big Data, Containers Orchestration, Infrastructure | Tags: Kafka, Big Data, Kubernetes, Open source, Streaming

Kubernetes is not the first platform that comes to mind to run Apache Kafka clusters. Indeed, Kafka’s strong dependency on storage might be a pain point regarding Kubernetes’ way of doing things when…

By Leo SCHOUKROUN

Mar 7, 2023

Dive into tdp-lib, the SDK in charge of TDP cluster management

Categories: Big Data, Infrastructure | Tags: Programming, Ansible, Hadoop, Python, TDP

All the deployments are automated and Ansible plays a central role. With the growing complexity of the code base, a new system was needed to overcome the Ansible limitations which will enable us to…

By Guillaume BOUTRY

Jan 24, 2023

Adaltas Summit 2022 Morzine

Categories: Big Data, Adaltas Summit 2022 | Tags: Data Engineering, Infrastructure, Iceberg, Container, Data lakehouse, Docker, Kubernetes

For its third edition, the whole Adaltas crew is gathering in Morzine for a whole week, with 2 days dedicated to technology: the 15th and the 16th of September 2022. The speakers choose one of the…

By David WORMS

Jan 13, 2023

Big data infrastructure internship

Categories: Big Data, Data Engineering, DevOps & SRE, Infrastructure | Tags: Infrastructure, Hadoop, Big Data, Cluster, Internship, Kubernetes, TDP

Job description Big Data and distributed computing are at the core of Adaltas. We accompany our partners in the deployment, maintenance, and optimization of some of the largest clusters in France…

By Stephan BAUM

Dec 2, 2022

Ceph object storage within a Kubernetes cluster with Rook

Categories: Big Data, Data Governance, Learning | Tags: Amazon S3, Big Data, Ceph, Cluster, Data Lake, Kubernetes, Storage

Ceph is a distributed all-in-one storage system. Reliable and mature, its first stable version was released in 2012 and has since then been the reference for open source storage. Ceph’s main perk is…

By Luka BIGOT

Aug 4, 2022

MinIO object storage within a Kubernetes cluster

Categories: Big Data, Data Governance, Learning | Tags: Amazon S3, Big Data, Cluster, Data Lake, Kubernetes, Storage

MinIO is a popular object storage solution. Often recommended for its simple setup and ease of use, it is not only a great way to get started with object storage: it also provides excellent…

By Luka BIGOT

Jul 9, 2022

Architecture of object-based storage and S3 standard specifications

Categories: Big Data, Data Governance | Tags: Database, API, Amazon S3, Big Data, Data Lake, Storage

Object storage has been growing in popularity among data storage architectures. Compared to file systems and block storage, object storage faces no limitations when handling petabytes of data. By…

By Luka BIGOT

Jun 20, 2022

Comparison of database architectures: data warehouse, data lake and data lakehouse

Categories: Big Data, Data Engineering | Tags: Data Governance, Infrastructure, Iceberg, Parquet, Spark, Data Lake, Data lakehouse, Data Warehouse, File Format

Database architectures have experienced constant innovation, evolving with the appearance of new use cases, technical constraints, and requirements. From the three database structures we are comparing…

By Gonzalo ETSE

May 17, 2022

Introducing Trunk Data Platform: the Open-Source Big Data Distribution Curated by TOSIT

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: DevOps, Hortonworks, Ansible, Hadoop, HBase, Knox, Ranger, Spark, Cloudera, CDP, CDH, Open source, TDP

Ever since Cloudera and Hortonworks merged, the choice of commercial Hadoop distributions for on-prem workloads essentially boils down to CDP Private Cloud. CDP can be seen as the “best of both worlds…

By Leo SCHOUKROUN

Apr 14, 2022

Apache HBase: RegionServers co-location

Categories: Big Data, Adaltas Summit 2021, Infrastructure | Tags: Ambari, Database, Infrastructure, Tuning, Hadoop, HBase, Big Data, HDP, Storage

RegionServers are the processes that manage the storage and retrieval of data in Apache HBase, the non-relational column-oriented database in Apache Hadoop. It is through their daemons that any CRUD…

By Pierre BERLAND

Feb 22, 2022

Using Cloudera Deploy to install Cloudera Data Platform (CDP) Private Cloud

Categories: Big Data, Cloud Computing | Tags: Ansible, Cloudera, CDP, Cluster, Data Warehouse, Vagrant, IaC

Following our recent Cloudera Data Platform (CDP) overview, we cover how to deploy CDP Private Cloud on your local infrastructure. It is entirely automated with the Ansible cookbooks published by…

Jul 23, 2021

An overview of Cloudera Data Platform (CDP)

Categories: Big Data, Cloud Computing, Data Engineering | Tags: SDX, Big Data, Cloud, Cloudera, CDP, CDH, Data Analytics, Data Hub, Data Lake, Data lakehouse, Data Warehouse

Cloudera Data Platform (CDP) is a cloud computing platform for businesses. It provides integrated and multifunctional self-service tools in order to analyze and centralize data. It brings security and…

Jul 19, 2021

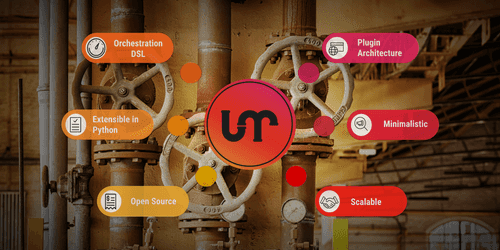

Apache Liminal: when MLOps meets GitOps

Categories: Big Data, Containers Orchestration, Data Engineering, Data Science, Tech Radar | Tags: Data Engineering, CI/CD, Data Science, Deep Learning, Deployment, Docker, GitOps, Kubernetes, Machine Learning, MLOps, Open source, Python, TensorFlow

Apache Liminal is open-source software that proposes a solution to deploy end-to-end Machine Learning pipelines. Indeed, it makes it possible to centralize all the steps needed to construct Machine Learning…

By Aargan COINTEPAS

Mar 31, 2021

TensorFlow Extended (TFX): the components and their functionalities

Categories: Big Data, Data Engineering, Data Science, Learning | Tags: Beam, Data Engineering, Pipeline, CI/CD, Data Science, Deep Learning, Deployment, Machine Learning, MLOps, Open source, Python, TensorFlow

Putting Machine Learning (ML) and Deep Learning (DL) models in production certainly is a difficult task. It has been recognized as more failure-prone and time-consuming than the modeling itself, yet…

Mar 5, 2021

Build your open source Big Data distribution with Hadoop, HBase, Spark, Hive & Zeppelin

Categories: Big Data, Infrastructure | Tags: Maven, Hadoop, HBase, Hive, Spark, Git, Release and features, TDP, Unit tests

The Hadoop ecosystem gave birth to many popular projects including HBase, Spark and Hive. While technologies like Kubernetes and S3 compatible object storages are growing in popularity, HDFS and YARN…

By Leo SCHOUKROUN

Dec 18, 2020

Connecting to ADLS Gen2 from Hadoop (HDP) and NiFi (HDF)

Categories: Big Data, Cloud Computing, Data Engineering | Tags: Hadoop, HDFS, NiFi, Authentication, Authorization, Azure, Azure Data Lake Storage (ADLS), OAuth2

As data projects built in the Cloud are becoming more and more frequent, a common use case is to interact with Cloud storage from an existing on-premises Big Data platform. Microsoft Azure recently…

By Gauthier LEONARD

Nov 5, 2020

Rebuilding HDP Hive: patch, test and build

Categories: Big Data, Infrastructure | Tags: Maven, Java, Hive, Git, GitHub, Release and features, TDP, Unit tests

The Hortonworks HDP distribution will soon be deprecated in favor of Cloudera’s CDP. One of our clients wanted a new Apache Hive feature backported into HDP 2.6.0. We thought it was a good opportunity…

By Leo SCHOUKROUN

Oct 6, 2020

Installing Hadoop from source: build, patch and run

Categories: Big Data, Infrastructure | Tags: Maven, Java, LXD, Hadoop, HDFS, Docker, TDP, Unit tests

Commercial Apache Hadoop distributions have come and gone. The two leaders, Cloudera and Hortonworks, have merged: HDP is no more and CDH is now CDP. MapR has been acquired by HP and IBM BigInsights…

By Leo SCHOUKROUN

Aug 4, 2020

Download datasets into HDFS and Hive

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data Engineering, Data structures, Database, Hadoop, HDFS, Hive, Big Data, Data Analytics, Data Lake, Data lakehouse, Data Warehouse

Introduction Nowadays, the analysis of large amounts of data is becoming more and more possible thanks to Big data technology (Hadoop, Spark,…). This explains the explosion of the data volume and the…

By Aida NGOM

Jul 31, 2020

Comparison of different file formats in Big Data

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data structures, Avro, HDFS, ORC, Parquet, Batch processing, Big Data, CSV, JavaScript Object Notation (JSON), Kubernetes, Protocol Buffers

In data processing, there are different types of file formats to store your data sets. Each format has its own pros and cons depending upon the use cases and exists to serve one or several purposes…

By Aida NGOM

Jul 23, 2020

Automate a Spark routine workflow from GitLab to GCP

Categories: Big Data, Cloud Computing, Containers Orchestration | Tags: Learning and tutorial, Airflow, Spark, CI/CD, GitLab, GitOps, GCP, Terraform

A workflow consists in automating a succession of tasks to be carried out without human intervention. It is an important and widespread concept which particularly applies to operational environments…

Jun 16, 2020

Introducing Apache Airflow on AWS

Categories: Big Data, Cloud Computing, Containers Orchestration | Tags: PySpark, Learning and tutorial, Airflow, Oozie, Spark, AWS, Docker, Python

Apache Airflow offers a potential solution to the growing challenge of managing an increasingly complex landscape of data management tools, scripts and analytics processes. It is an open-source…

By Aargan COINTEPAS

May 5, 2020

Cloudera CDP and Cloud migration of your Data Warehouse

Categories: Big Data, Cloud Computing | Tags: Azure, Cloudera, Data Hub, Data Lake, Data Warehouse

While one of our customers is anticipating a move to the Cloud and with the recent announcement of Cloudera CDP availability mid-September during the Strata conference, it seems like the appropriate…

By David WORMS

Dec 16, 2019

Should you move your Big Data and Data Lake to the Cloud

Categories: Big Data, Cloud Computing | Tags: DevOps, AWS, Azure, Cloud, CDP, Databricks, GCP

Should you follow the trend and migrate your data, workflows and infrastructure to GCP, AWS and Azure? During the Strata Data Conference in New York, a general focus was put on moving customer’s Big…

By Joris RUMMENS

Dec 9, 2019

InfraOps & DevOps Internship - build a Big Data & Kubernetes PaaS

Categories: Big Data, Containers Orchestration | Tags: DevOps, LXD, Hadoop, Kafka, Spark, Ceph, Internship, Kubernetes, NoSQL

Context The acquisition of a high-capacity cluster is in line with Adaltas’ desire to build a PaaS-type offering to use and to provide Big Data and container orchestration platforms. The platforms are…

By David WORMS

Nov 26, 2019

Notes on the Cloudera Open Source licensing model

Categories: Big Data | Tags: CDSW, License, Cloudera Manager, Open source

Following the publication of its Open Source licensing strategy on July 10, 2019 in an article called “our Commitment to Open Source Software”, Cloudera broadcast a webinar yesterday, October 2…

By David WORMS

Oct 25, 2019

Machine Learning model deployment

Categories: Big Data, Data Engineering, Data Science, DevOps & SRE | Tags: DevOps, Operation, AI, Cloud, Machine Learning, MLOps, On-premises, Schema

“Enterprise Machine Learning requires looking at the big picture […] from a data engineering and a data platform perspective,” lectured Justin Norman during the talk on the deployment of Machine…

By Oskar RYNKIEWICZ

Sep 30, 2019

Running Apache Hive 3, new features and tips and tricks

Categories: Big Data, Business Intelligence, DataWorks Summit 2019 | Tags: JDBC, LLAP, Druid, Hadoop, Hive, Kafka, Release and features

Apache Hive 3 brings a bunch of new and nice features to the data warehouse. Unfortunately, like many major FOSS releases, it comes with a few bugs and not much documentation. It is available since…

By Gauthier LEONARD

Jul 25, 2019

Auto-scaling Druid with Kubernetes

Categories: Big Data, Business Intelligence, Containers Orchestration | Tags: Helm, Metrics, OLAP, Operation, Container Orchestration, EC2, Druid, Cloud, CNCF, Data Analytics, Kubernetes, Prometheus, Python

Apache Druid is an open-source analytics data store which could leverage the auto-scaling abilities of Kubernetes due to its distributed nature and its reliance on memory. I was inspired by the talk…

By Leo SCHOUKROUN

Jul 16, 2019

Druid and Hive integration

Categories: Big Data, Business Intelligence, Tech Radar | Tags: LLAP, OLAP, Druid, Hive, Data Analytics, SQL

This article covers the integration between Hive Interactive (LLAP) and Druid. One can see it as a complement of the Ultra-fast OLAP Analytics with Apache Hive and Druid article. Tools description…

By Pierre SAUVAGE

Jun 17, 2019

Spark Streaming part 3: DevOps, tools and tests for Spark applications

Categories: Big Data, Data Engineering, DevOps & SRE | Tags: DevOps, Learning and tutorial, Spark, Apache Spark Streaming

Whenever services are unavailable, businesses experience large financial losses. Spark Streaming applications can break, like any other software application. A streaming application operates on data…

By Oskar RYNKIEWICZ

May 31, 2019

Apache Knox made easy!

Categories: Big Data, Cyber Security, Adaltas Summit 2018 | Tags: LDAP, Active Directory, Knox, Ranger, Kerberos, REST

Apache Knox is the secure entry point of a Hadoop cluster, but can it also be the entry point for my REST applications? Apache Knox overview Apache Knox is an application gateway for interacting in a…

By Michael HATOUM

Feb 4, 2019

Hadoop cluster takeover with Apache Ambari

Categories: Big Data, DevOps & SRE, Adaltas Summit 2018 | Tags: Ambari, Automation, iptables, Nikita, Systemd, Cluster, HDP, Kerberos, Node, Node.js, REST

We recently migrated a large production Hadoop cluster from a “manual” automated install to Apache Ambari; we called this the Ambari Takeover. This is a risky process and we will detail why this…

By Leo SCHOUKROUN

Nov 15, 2018

Deploying a secured Flink cluster on Kubernetes

Categories: Big Data | Tags: Encryption, Flink, HDFS, Kafka, Elasticsearch, Kerberos, SSL/TLS

When deploying secured Flink applications inside Kubernetes, you are faced with two choices. Assuming your Kubernetes is secure, you may rely on the underlying platform or rely on Flink native…

By David WORMS

Oct 8, 2018

Clusters and workloads migration from Hadoop 2 to Hadoop 3

Categories: Big Data, Infrastructure | Tags: Slider, Erasure Coding, Rolling Upgrade, HDFS, Spark, YARN, Docker

Hadoop 2 to Hadoop 3 migration is a hot subject. How to upgrade your clusters, which features present in the new release may solve current problems and bring new opportunities, how are your current…

By Lucas BAKALIAN

Jul 25, 2018

Curing the Kafka blindness with the UI manager

Categories: Big Data | Tags: Ambari, Hortonworks, HDF, JMX, UI, Kafka, Ranger, HDP

Today it’s really difficult for developers, operators and managers to visualize and monitor what happens in a Kafka cluster. This article covers a new graphical interface to oversee Kafka. It was…

By Lucas BAKALIAN

Jun 20, 2018

Data Lake ingestion best practices

Categories: Big Data, Data Engineering | Tags: Data Governance, HDF, Operation, Avro, Hive, NiFi, ORC, Spark, Data Lake, File Format, Protocol Buffers, Registry, Schema

Creating a Data Lake requires rigor and experience. Here are some good practices around data ingestion both for batch and stream architectures that we recommend and implement with our customers…

By David WORMS

Jun 18, 2018

Apache Hadoop YARN 3.0 – State of the union

Categories: Big Data, DataWorks Summit 2018 | Tags: GPU, Hortonworks, Hadoop, HDFS, MapReduce, YARN, Cloudera, Data Science, Docker, Release and features

This article covers the “Apache Hadoop YARN: state of the union” talk held by Wangda Tan from Hortonworks during the Dataworks Summit 2018. What is Apache YARN? As a reminder, YARN is one of the two…

By Lucas BAKALIAN

May 31, 2018

Running Enterprise Workloads in the Cloud with Cloudbreak

Categories: Big Data, Cloud Computing, DataWorks Summit 2018 | Tags: Cloudbreak, Operation, Hadoop, AWS, Azure, GCP, HDP, OpenStack

This article is based on Peter Darvasi and Richard Doktorics’ talk Running Enterprise Workloads in the Cloud at the DataWorks Summit 2018 in Berlin. It presents Hortonworks’ automated deployment tool…

By Joris RUMMENS

May 28, 2018

Omid: Scalable and highly available transaction processing for Apache Phoenix

Categories: Big Data, DataWorks Summit 2018 | Tags: Omid, Phoenix, Transaction, ACID, HBase, SQL

Apache Omid provides a transactional layer on top of key/value NoSQL databases. In practice, it is usually used on top of Apache HBase. Credits to Ohad Shacham for his talk and his work for Apache…

By Xavier HERMAND

May 24, 2018

Present and future of Hadoop workflow scheduling: Oozie 5.x

Categories: Big Data, DataWorks Summit 2018 | Tags: Hadoop, Hive, Oozie, Sqoop, CDH, HDP, REST

During the DataWorks Summit Europe 2018 in Berlin, I had the opportunity to attend a breakout session on Apache Oozie. It covers the new features released in Oozie 5.0, including future features of…

By Leo SCHOUKROUN

May 23, 2018

Essential questions about Time Series

Categories: Big Data | Tags: Druid, HBase, Hive, ORC, Data Science, Elasticsearch, Grafana, IOT

Today, the bulk of Big Data is temporal. We see it in the media and among our customers: smart meters, banking transactions, smart factories, connected vehicles … IoT and Big Data go hand in hand. We…

By David WORMS

Mar 18, 2018

Ambari - How to blueprint

Categories: Big Data, DevOps & SRE | Tags: Ambari, Automation, DevOps, Operation, Ranger, REST

As infrastructure engineers at Adaltas, we deploy Hadoop clusters. A lot of them. Let’s see how to automate this process with REST requests. While really handy for deploying one or two clusters, the…

By Joris RUMMENS

Jan 17, 2018

Cloudera Sessions Paris 2017

Categories: Big Data, Events | Tags: Altus, CDSW, SDX, EC2, Azure, Cloudera, CDH, Data Science, PaaS

Adaltas was at the Cloudera Sessions on October 5, where Cloudera showcased their new products and offerings. Below you’ll find a summary of what we witnessed. Note: the information was aggregated in…

By César BEREZOWSKI

Oct 16, 2017

Change Ambari's topbar color

Categories: Big Data, Hack | Tags: Ambari, Front-end

We recently had a client that has multiple environments (Production, Integration, Testing, …) running on HDP and managed using one Ambari instance per cluster. One of the questions that came up was…

By César BEREZOWSKI

Jul 9, 2017

MiNiFi: Data at Scales & the Values of Starting Small

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: MiNiFi, C++, HDF, NiFi, Cloudera, HDP, IOT

This conference briefly presented Apache NiFi and explained where MiNiFi came from: basically it’s a NiFi minimal agent to deploy on small devices to bring data to a cluster’s NiFi pipeline (ex: IoT…

By César BEREZOWSKI

Jul 8, 2017

HDP cluster monitoring

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: Alert, Ambari, Metrics, Monitoring, HDP, REST

With the current growth of Big Data technologies, more and more companies are building their own clusters in the hope of getting some value from their data. One main concern while building these infrastructures…

By Joris RUMMENS

Jul 5, 2017

Advanced multi-tenant Hadoop and Zookeeper protection

Categories: Big Data, Infrastructure | Tags: DoS, iptables, Operation, Scalability, Zookeeper, Clustering, Consensus

Zookeeper is a critical component to Hadoop’s high availability operation. The latter protects itself by limiting the maximum number of connections (maxConns = 400). However Zookeeper does not protect…

By Pierre SAUVAGE

Jul 5, 2017

Hive Metastore HA with DBTokenStore: Failed to initialize master key

Categories: Big Data, DevOps & SRE | Tags: Infrastructure, Hive, Bug

This article describes my little adventure around a startup error with the Hive Metastore. It shall be reproducible with any secure installation, meaning with Kerberos, with high availability enabled…

By David WORMS

Jul 21, 2016

Get in control of your workflows with Apache Airflow

Categories: Big Data, Tech Radar | Tags: DevOps, Airflow, Cloud, Python

Below is a compilation of my notes taken during the presentation of Apache Airflow by Christian Trebing from BlueYonder. Introduction Use case: how to handle data coming in regularly from customers…

By César BEREZOWSKI

Jul 17, 2016

Hive, Calcite and Druid

Categories: Big Data | Tags: Business intelligence, Database, Druid, Hadoop, Hive

BI/OLAP requires interactive visualization of complex data streams: real-time bidding events, user activity streams, voice call logs, network traffic flows, firewall events, application KPIs. Traditional…

By David WORMS

Jul 14, 2016

Red Hat Storage Gluster and its integration with Hadoop

Categories: Big Data | Tags: GlusterFS, Red Hat, Hadoop, HDFS, Storage

I had the opportunity to be introduced to Red Hat Storage and Gluster in a joint presentation by Red Hat France and the company StartX. I have here recompiled my notes, at least partially. I will…

By David WORMS

Jul 3, 2015

Components for CDH and HDP

Categories: Big Data | Tags: Flume, Hortonworks, Hadoop, Hive, Oozie, Sqoop, Zookeeper, Cloudera, CDH, HDP

I was interested in comparing the different components distributed by Cloudera and Hortonworks. This also gives us an idea of the versions packaged by the two distributions. At the time of this writing…

By David WORMS

Sep 22, 2013

State of the Hadoop open-source ecosystem in early 2013

Categories: Big Data | Tags: Flume, Mesos, Phoenix, Pig, Hadoop, Kafka, Mahout, Data Science

Hadoop is already a large ecosystem and my guess is that 2013 will be the year where it grows even larger. There are some pieces that we no longer need to present. ZooKeeper, HBase, Hive, Pig, Flume…

By David WORMS

Jul 8, 2013

Oracle and Hive, how data are published?

Categories: Big Data | Tags: Oracle, Hive, Sqoop, Data Lake

In the past few days, I’ve published 3 related articles: a first one covering the option to integrate Oracle and Hadoop, a second one explaining how to install and use the Oracle SQL Connector with…

By David WORMS

Jul 6, 2013

The state of Hadoop distributions

Categories: Big Data | Tags: Hortonworks, Intel, Oracle, Hadoop, Cloudera

Apache Hadoop is of course made available for download on its official webpage. However, downloading and installing the several components that make a Hadoop cluster is not an easy task and is a…

By David WORMS

May 11, 2013

HDFS and Hive storage - comparing file formats and compression methods

Categories: Big Data | Tags: Business intelligence, Hive, ORC, Parquet, File Format

A few days ago, we conducted a test in order to compare various Hive file formats and compression methods. Among those file formats, some are native to HDFS and apply to all Hadoop users. The…

By David WORMS

Mar 13, 2012

Hadoop and HBase installation on OSX in pseudo-distributed mode

Categories: Big Data, Learning | Tags: Hue, Infrastructure, Hadoop, HBase, Big Data, Deployment

The operating system chosen is OSX but the procedure is not so different for any Unix environment because most of the software is downloaded from the Internet, uncompressed and set manually. Only a…

By David WORMS

Dec 1, 2010

Storage and massive processing with Hadoop

Categories: Big Data | Tags: Hadoop, HDFS, Storage

Apache Hadoop is a system for building shared storage and processing infrastructures for large volumes of data (multiple terabytes or petabytes). Hadoop clusters are used by a wide range of projects…

By David WORMS

Nov 26, 2010

Node HBase, a NodeJs client for Apache HBase

Categories: Big Data, Node.js | Tags: HBase, Big Data, Node.js, REST

HBase is a “column family” database from the Hadoop ecosystem built on the model of Google BigTable. HBase can accommodate very large volumes of data (tera or peta) while maintaining high…

By David WORMS

Nov 1, 2010

MapReduce introduction

Categories: Big Data | Tags: Java, MapReduce, Big Data, JavaScript

Information systems have more and more data to store and process. Companies like Google, Facebook, Twitter and many others store astronomical amounts of information from their customers and must be…

By David WORMS

Jun 26, 2010