Data Engineering

Data is the energy that powers digital transformation. Developers consume it in their applications. Data Analysts explore, query, and share it. Data Scientists feed their algorithms with it. Data Engineers are responsible for building the value chain that covers the collection, cleaning, enrichment, and provisioning of data.

Handling scalability, guaranteeing data security and integrity, tolerating failures, processing data in batches or as continuous streams, validating schemas, publishing APIs, and selecting the formats, models, and databases suited to how the data is exposed are all responsibilities of the Data Engineer. The trust and success of those who consume and exploit the data stem from this work.

Articles related to Data Engineering

CDP part 6: end-to-end data lakehouse ingestion pipeline with CDP

Categories: Big Data, Data Engineering, Learning | Tags: Business intelligence, Data Engineering, Iceberg, NiFi, Spark, Big Data, Cloudera, CDP, Data Analytics, Data Lake, Data Warehouse

In this hands-on lab session we demonstrate how to build an end-to-end big data solution with Cloudera Data Platform (CDP) Public Cloud, using the infrastructure we have deployed and configured over…

By Tobias CHAVARRIA

24 July 2023

CDP part 1: introduction to end-to-end data lakehouse architecture with CDP

Categories: Cloud Computing, Data Engineering, Infrastructure | Tags: Data Engineering, Hortonworks, Iceberg, AWS, Azure, Big Data, Cloud, Cloudera, CDP, Cloudera Manager, Data Warehouse

Cloudera Data Platform (CDP) is a hybrid data platform for big data transformation, machine learning and data analytics. In this series we describe how to build and use an end-to-end big data…

By Stephan BAUM

8 June 2023

Keycloak deployment in EC2

Categories: Cloud Computing, Data Engineering, Infrastructure | Tags: Security, EC2, Authentication, AWS, Docker, Keycloak, SSL/TLS, SSO

Why use Keycloak? Keycloak is an open-source identity provider (IdP) using single sign-on (SSO). An IdP is a tool to create, maintain, and manage identity information for principals and to provide…

By Stephan BAUM

14 March 2023

Big data infrastructure internship

Categories: Big Data, Data Engineering, DevOps & SRE, Infrastructure | Tags: Infrastructure, Hadoop, Big Data, Cluster, Internship, Kubernetes, TDP

Job description: Big Data and distributed computing are at the core of Adaltas. We accompany our partners in the deployment, maintenance, and optimization of some of the largest clusters in France…

By Stephan BAUM

2 December 2022

Comparison of database architectures: data warehouse, data lake and data lakehouse

Categories: Big Data, Data Engineering | Tags: Data Governance, Infrastructure, Iceberg, Parquet, Spark, Data Lake, Data lakehouse, Data Warehouse, File Format

Database architectures have experienced constant innovation, evolving with the appearance of new use cases, technical constraints, and requirements. From the three database structures we are comparing…

By Gonzalo ETSE

17 May 2022

Databricks logs collection with Azure Monitor at a Workspace Scale

Categories: Cloud Computing, Data Engineering, Adaltas Summit 2021 | Tags: Metrics, Monitoring, Spark, Azure, Databricks, Log4j

Databricks is an optimized data analytics platform based on Apache Spark. Monitoring the Databricks platform is crucial to ensure data quality, job performance, and security issues by limiting access to…

By Claire PLAYE

10 May 2022

An overview of Cloudera Data Platform (CDP)

Categories: Big Data, Cloud Computing, Data Engineering | Tags: SDX, Big Data, Cloud, Cloudera, CDP, CDH, Data Analytics, Data Hub, Data Lake, Data lakehouse, Data Warehouse

Cloudera Data Platform (CDP) is a cloud computing platform for businesses. It provides integrated and multifunctional self-service tools in order to analyze and centralize data. It brings security and…

19 July 2021

Self-Paced training from Databricks: a guide to self-enablement on Big Data & AI

Categories: Data Engineering, Learning | Tags: Cloud, Data Lake, Databricks, Delta Lake, MLflow

Self-paced training courses are offered by Databricks through its Academy program. The price is $2,000 USD for unlimited access to the training courses for a period of 1 year, but they are also free for customers…

By Anna KNYAZEVA

26 May 2021

Find your way into data related Microsoft Azure certifications

Categories: Cloud Computing, Data Engineering | Tags: Data Governance, Azure, Data Science

Microsoft Azure has certification paths for many technical job roles such as developer, Data Engineer, Data Scientist and solution architect among others. Each of these certifications consists of…

By Barthelemy NGOM

14 April 2021

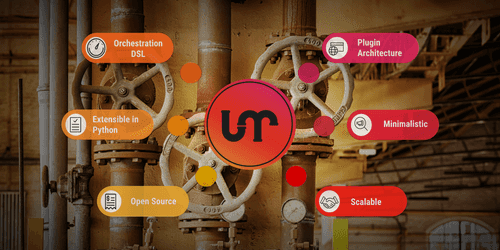

Apache Liminal: when MLOps meets GitOps

Categories: Big Data, Containers Orchestration, Data Engineering, Data Science, Tech Radar | Tags: Data Engineering, CI/CD, Data Science, Deep Learning, Deployment, Docker, GitOps, Kubernetes, Machine Learning, MLOps, Open source, Python, TensorFlow

Apache Liminal is open-source software that offers a solution for deploying end-to-end Machine Learning pipelines. It makes it possible to centralize all the steps needed to construct Machine Learning…

By Aargan COINTEPAS

31 March 2021

Storage size and generation time in popular file formats

Categories: Data Engineering, Data Science | Tags: Avro, HDFS, Hive, ORC, Parquet, Big Data, Data Lake, File Format, JavaScript Object Notation (JSON)

Choosing an appropriate file format is essential, whether your data transits on the wire or is stored at rest. Each file format comes with its own advantages and disadvantages. We covered them in a…

By Barthelemy NGOM

22 March 2021

TensorFlow Extended (TFX): the components and their functionalities

Categories: Big Data, Data Engineering, Data Science, Learning | Tags: Beam, Data Engineering, Pipeline, CI/CD, Data Science, Deep Learning, Deployment, Machine Learning, MLOps, Open source, Python, TensorFlow

Putting Machine Learning (ML) and Deep Learning (DL) models in production certainly is a difficult task. It has been recognized as more failure-prone and time-consuming than the modeling itself, yet…

5 March 2021

Connecting to ADLS Gen2 from Hadoop (HDP) and NiFi (HDF)

Categories: Big Data, Cloud Computing, Data Engineering | Tags: Hadoop, HDFS, NiFi, Authentication, Authorization, Azure, Azure Data Lake Storage (ADLS), OAuth2

As data projects built in the Cloud are becoming more and more frequent, a common use case is to interact with Cloud storage from an existing on-premises Big Data platform. Microsoft Azure recently…

By Gauthier LEONARD

5 November 2020

Experiment tracking with MLflow on Databricks Community Edition

Categories: Data Engineering, Data Science, Learning | Tags: Spark, Databricks, Deep Learning, Delta Lake, Machine Learning, MLflow, Notebook, Python, Scikit-learn

Introduction to Databricks Community Edition and MLflow: Every day the number of tools helping Data Scientists to build models faster increases. Consequently, the need to manage the results and the…

10 September 2020

Download datasets into HDFS and Hive

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data Engineering, Data structures, Database, Hadoop, HDFS, Hive, Big Data, Data Analytics, Data Lake, Data lakehouse, Data Warehouse

Introduction: Nowadays, the analysis of large amounts of data is becoming more and more possible thanks to Big data technology (Hadoop, Spark,…). This explains the explosion of the data volume and the…

By Aida NGOM

31 July 2020

Comparison of different file formats in Big Data

Categories: Big Data, Data Engineering | Tags: Business intelligence, Data structures, Avro, HDFS, ORC, Parquet, Batch processing, Big Data, CSV, JavaScript Object Notation (JSON), Kubernetes, Protocol Buffers

In data processing, there are different types of file formats to store your data sets. Each format has its own pros and cons depending upon the use cases and exists to serve one or several purposes…

By Aida NGOM

23 July 2020

Importing data to Databricks: external tables and Delta Lake

Categories: Data Engineering, Data Science, Learning | Tags: Parquet, AWS, Amazon S3, Azure Data Lake Storage (ADLS), Databricks, Delta Lake, Python

During a Machine Learning project we need to keep track of the training data we are using. This is important for audit purposes and for assessing the performance of the models, developed at a later…

21 May 2020

Optimization of Spark applications in Hadoop YARN

Categories: Data Engineering, Learning | Tags: Tuning, Hadoop, Spark, Python

Apache Spark is an in-memory data processing tool widely used in companies to deal with Big Data issues. Running a Spark application in production requires user-defined resources. This article…

30 March 2020

MLflow tutorial: an open source Machine Learning (ML) platform

Categories: Data Engineering, Data Science, Learning | Tags: AWS, Azure, Databricks, Deep Learning, Deployment, Machine Learning, MLflow, MLOps, Python, Scikit-learn

Introduction and principles of MLflow: With increasingly cheap computing power and storage and at the same time increasing data collection in all walks of life, many companies integrated Data Science…

23 March 2020

Logstash pipelines remote configuration and self-indexing

Categories: Data Engineering, Infrastructure | Tags: Docker, Elasticsearch, Kibana, Logstash, Log4j

Logstash is a powerful data collection engine that integrates in the Elastic Stack (Elasticsearch - Logstash - Kibana). The goal of this article is to show you how to deploy a fully managed Logstash…

13 December 2019

Internship Data Science & Data Engineer - ML in production and streaming data ingestion

Categories: Data Engineering, Data Science | Tags: DevOps, Flink, Hadoop, HBase, Kafka, Spark, Internship, Kubernetes, Python

Context: The exponential evolution of data has turned the industry upside down by redefining data storage, processing and data ingestion pipelines. Mastering these methods considerably facilitates…

By David WORMS

26 November 2019

Insert rows in BigQuery tables with complex columns

Categories: Cloud Computing, Data Engineering | Tags: GCP, BigQuery, Schema, SQL

Google’s BigQuery is a cloud data warehousing system designed to process enormous volumes of data with several features available. Out of all those features, let’s talk about the support of Struct…

By César BEREZOWSKI

22 November 2019

Machine Learning model deployment

Categories: Big Data, Data Engineering, Data Science, DevOps & SRE | Tags: DevOps, Operation, AI, Cloud, Machine Learning, MLOps, On-premises, Schema

“Enterprise Machine Learning requires looking at the big picture […] from a data engineering and a data platform perspective,” lectured Justin Norman during the talk on the deployment of Machine…

By Oskar RYNKIEWICZ

30 September 2019

Spark Streaming part 4: clustering with Spark MLlib

Categories: Data Engineering, Data Science, Learning | Tags: Spark, Apache Spark Streaming, Big Data, Clustering, Machine Learning, Scala, Streaming

Spark MLlib is an Apache Spark library offering scalable implementations of various supervised and unsupervised Machine Learning algorithms. Thus, the Spark framework can serve as a platform for…

By Oskar RYNKIEWICZ

27 June 2019

Spark Streaming part 3: DevOps, tools and tests for Spark applications

Categories: Big Data, Data Engineering, DevOps & SRE | Tags: DevOps, Learning and tutorial, Spark, Apache Spark Streaming

Whenever services are unavailable, businesses experience large financial losses. Spark Streaming applications can break, like any other software application. A streaming application operates on data…

By Oskar RYNKIEWICZ

31 May 2019

Spark Streaming part 2: run Spark Structured Streaming pipelines in Hadoop

Categories: Data Engineering, Learning | Tags: Spark, Apache Spark Streaming, Python, Streaming

Spark can process streaming data on a multi-node Hadoop cluster relying on HDFS for the storage and YARN for the scheduling of jobs. Thus, Spark Structured Streaming integrates well with Big Data…

By Oskar RYNKIEWICZ

28 May 2019

Spark Streaming part 1: build data pipelines with Spark Structured Streaming

Categories: Data Engineering, Learning | Tags: Kafka, Spark, Apache Spark Streaming, Big Data, Streaming

Spark Structured Streaming is a new engine introduced with Apache Spark 2 used for processing streaming data. It is built on top of the existing Spark SQL engine and the Spark DataFrame. The…

By Oskar RYNKIEWICZ

18 April 2019

Publish Spark SQL DataFrame and RDD with Spark Thrift Server

Categories: Data Engineering | Tags: Thrift, JDBC, Hadoop, Hive, Spark, SQL

The distributed and in-memory nature of the Spark engine makes it an excellent candidate to expose data to clients which expect low latencies. Dashboards, notebooks, BI studios, KPIs-based reports…

By Oskar RYNKIEWICZ

25 March 2019

Apache Flink: past, present and future

Categories: Data Engineering | Tags: Pipeline, Flink, Kubernetes, Machine Learning, SQL, Streaming

Apache Flink is a little gem which deserves a lot more attention. Let’s dive into Flink’s past, its current state and the future it is heading to by following the keynotes and presentations at Flink…

By César BEREZOWSKI

5 November 2018

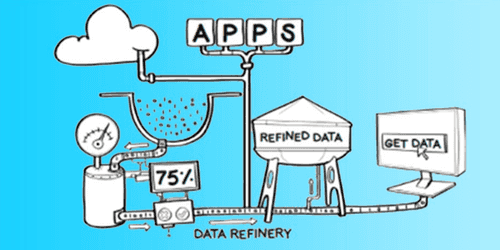

Data Lake ingestion best practices

Categories: Big Data, Data Engineering | Tags: Data Governance, HDF, Operation, Avro, Hive, NiFi, ORC, Spark, Data Lake, File Format, Protocol Buffers, Registry, Schema

Creating a Data Lake requires rigor and experience. Here are some good practices around data ingestion both for batch and stream architectures that we recommend and implement with our customers…

By David WORMS

18 June 2018

Apache Beam: a unified programming model for data processing pipelines

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Apex, Beam, Pipeline, Flink, Spark

In this article, we will review the concepts, the history and the future of Apache Beam, which may well become the new standard for data processing pipelines definition. At DataWorks Summit 2018 in…

By Gauthier LEONARD

24 May 2018

What's new in Apache Spark 2.3?

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Arrow, PySpark, Tuning, ORC, Spark, Spark MLlib, Data Science, Docker, Kubernetes, pandas, Streaming

Let’s dive into the new features offered by the 2.3 distribution of Apache Spark. This article is a composition of the following talks seen at the DataWorks Summit 2018 and additional research: Apache…

By César BEREZOWSKI

23 May 2018

Execute Python in an Oozie workflow

Categories: Data Engineering | Tags: Oozie, Elasticsearch, Python, REST

Oozie workflows allow you to use multiple actions to execute code; however, doing so with Python can be a bit tricky. Let’s see how to do that. I’ve recently designed a workflow that would interact…

By César BEREZOWSKI

6 March 2018

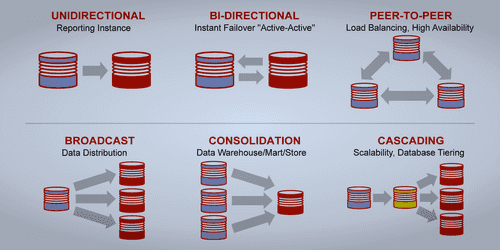

Oracle DB synchronization to Hadoop with CDC

Categories: Data Engineering | Tags: CDC, GoldenGate, Oracle, Hive, Sqoop, Data Warehouse

This note is the result of a discussion about the synchronization of data written in a database to a warehouse stored in Hadoop. Thanks to Claude Daub from GFI who wrote it and who authorizes us to…

By David WORMS

13 July 2017

EclairJS - Putting a Spark in Web Apps

Categories: Data Engineering, Front End | Tags: Spark, JavaScript, Jupyter

Presentation by David Fallside from IBM, images extracted from the presentation. Introduction: Web Apps development has moved from Java to Node.js and JavaScript. It provides a simple and rich…

By David WORMS

17 July 2016

Splitting HDFS files into multiple Hive tables

Categories: Data Engineering | Tags: Flume, Pig, HDFS, Hive, Oozie, SQL

I am going to show how to split a CSV file stored inside HDFS as multiple Hive tables based on the content of each record. The context is simple. We are using Flume to collect logs from all over our…

By David WORMS

15 September 2013

Testing the Oracle SQL Connector for Hadoop HDFS

Categories: Data Engineering | Tags: Database, File system, Oracle, HDFS, CDH, SQL

Using Oracle SQL Connector for HDFS, you can use Oracle Database to access and analyze data residing in HDFS files or a Hive table. You can also query and join data in HDFS or a Hive table with other…

By David WORMS

15 July 2013

Options to connect and integrate Hadoop with Oracle

Categories: Data Engineering | Tags: Database, Java, Oracle, R, RDBMS, Avro, HDFS, Hive, MapReduce, Sqoop, NoSQL, SQL

I will list the different tools and libraries available to us developers in order to integrate Oracle and Hadoop. The Oracle SQL Connector for HDFS described below is covered in a follow-up article…

By David WORMS

15 May 2013

Two Hive UDAF to convert an aggregation to a map

Categories: Data Engineering | Tags: Java, HBase, Hive, File Format

I am publishing two new Hive UDAFs to help with maps in Apache Hive. The source code is available on GitHub in two Java classes: “UDAFToMap” and “UDAFToOrderedMap” or you can download the jar file. The…

By David WORMS

6 March 2012

Timeseries storage in Hadoop and Hive

Categories: Data Engineering | Tags: CRM, timeseries, Tuning, Hadoop, HDFS, Hive, File Format

In the next few weeks, we will be exploring the storage and analytics of a large generated dataset. This dataset is composed of CRM tables associated with one time series table of about 7,000 billiard rows…

By David WORMS

10 January 2012