Python

Python is a dynamic, interpreted scripting language. It was created in the early 1990s by Guido van Rossum. Today, it is developed as an open-source project by contributors worldwide, under the stewardship of the Python Software Foundation (PSF). The language aims to be easy to learn and to produce intuitive, readable code while remaining as powerful as other established programming languages.

Related articles

Node.js is now integrated to the Microsoft Azure platform

Categories: Cloud Computing, Tech Radar | Tags: Linux, Azure, Cloud, Node.js

Node is now a first-class citizen in the Microsoft Azure cloud environment alongside .NET, Java and PHP. This integration is the logical consequence of Microsoft’s involvement in the development of…

By David WORMS

Dec 11, 2011

E-commerce electronic cigarettes: first impressions with Prestashop

Categories: Tech Radar | Tags: HTML, Java, Node.js

Last year, I had to select and integrate e-commerce software for CigarHit, a website selling electronic cigarettes. Considering that the last e-commerce integration I made dated from 2005, I took…

By David WORMS

Jul 25, 2012

Splitting HDFS files into multiple hive tables

Categories: Data Engineering | Tags: Flume, Pig, HDFS, Hive, Oozie, SQL

I am going to show how to split a CSV file stored inside HDFS into multiple Hive tables based on the content of each record. The context is simple. We are using Flume to collect logs from all over our…

By David WORMS

Sep 15, 2013

Node.js, JavaScript on the server side

Categories: Front End, Node.js | Tags: HTTP, Server, JavaScript, Node.js

While waiting for the Next Big Language (NBL), I have been predicting to my customers for three years or more a bright future for JavaScript as a programming language for server…

By David WORMS

Jun 12, 2010

Get in control of your workflows with Apache Airflow

Categories: Big Data, Tech Radar | Tags: DevOps, Airflow, Cloud, Python

Below is a compilation of my notes taken during the presentation of Apache Airflow by Christian Trebing from BlueYonder. Introduction. Use case: how to handle data coming in regularly from customers…

Jul 17, 2016

HDP cluster monitoring

Categories: Big Data, DevOps & SRE, Infrastructure | Tags: Alert, Ambari, Metrics, Monitoring, HDP, REST

With the current growth of Big Data technologies, more and more companies are building their own clusters in the hope of getting some value out of their data. One main concern while building these infrastructures…

Jul 5, 2017

Cloudera Sessions Paris 2017

Categories: Big Data, Events | Tags: Altus, CDSW, SDX, EC2, Azure, Cloudera, CDH, Data Science, PaaS

Adaltas was at the Cloudera Sessions on October 5, where Cloudera showcased their new products and offerings. Below you’ll find a summary of what we witnessed. Note: the information was aggregated in…

Oct 16, 2017

From Dockerfile to Ansible Containers

Categories: Containers Orchestration, DevOps & SRE, Open Source Summit Europe 2017 | Tags: pip, Shell, Ansible, Docker, Docker Compose, YAML

This talk introduced the Dockerfile format and the Ansible Container tool, then compared the two. It was given by Tomas Tomecek from Red Hat’s containerization team. The Dockerfile…

Oct 25, 2017

Execute Python in an Oozie workflow

Categories: Data Engineering | Tags: Oozie, Elasticsearch, Python, REST

Oozie workflows allow you to use multiple actions to execute code. However, doing so with Python can be a bit tricky. Let’s see how to do that. I’ve recently designed a workflow that would interact…

Mar 6, 2018

What's new in Apache Spark 2.3?

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Arrow, PySpark, Tuning, ORC, Spark, Spark MLlib, Data Science, Docker, Kubernetes, pandas, Streaming

Let’s dive into the new features offered by the 2.3 distribution of Apache Spark. This article is a composition of the following talks seen at the DataWorks Summit 2018 and additional research: Apache…

May 23, 2018

Present and future of Hadoop workflow scheduling: Oozie 5.x

Categories: Big Data, DataWorks Summit 2018 | Tags: Hadoop, Hive, Oozie, Sqoop, CDH, HDP, REST

During the DataWorks Summit Europe 2018 in Berlin, I had the opportunity to attend a breakout session on Apache Oozie. It covers the new features released in Oozie 5.0, including future features of…

May 23, 2018

Apache Beam: a unified programming model for data processing pipelines

Categories: Data Engineering, DataWorks Summit 2018 | Tags: Apex, Beam, Pipeline, Flink, Spark

In this article, we will review the concepts, the history and the future of Apache Beam, which may well become the new standard for defining data processing pipelines. At DataWorks Summit 2018 in…

May 24, 2018

TensorFlow on Spark 2.3: The Best of Both Worlds

Categories: Data Science, DataWorks Summit 2018 | Tags: Mesos, C++, CPU, GPU, Tuning, Spark, YARN, JavaScript, Keras, Kubernetes, Machine Learning, Python, TensorFlow

The integration of TensorFlow with Spark has a lot of potential and creates new opportunities. This article is based on a talk seen at the DataWorks Summit 2018 in Berlin. It was about the new…

By Yliess HATI

May 29, 2018

Lando: Deep Learning used to summarize conversations

Categories: Data Science, Learning | Tags: Micro Services, Open API, Deep Learning, Internship, Kubernetes, Neural Network, Node.js

Lando is an application that summarizes conversations, using Speech To Text to transcribe the recording of a meeting into text and Deep Learning techniques to summarize its contents. It allows users to…

By Yliess HATI

Sep 18, 2018

Jumbo, the Hadoop cluster bootstrapper

Categories: Infrastructure | Tags: Ambari, Automation, Ansible, Cluster, Vagrant, HDP, REST

Introducing Jumbo, a Hadoop cluster bootstrapper for developers. Jumbo helps you deploy development environments for Big Data technologies. It takes a few minutes to get a custom virtualized Hadoop…

Nov 29, 2018

CodaLab – Data Science competitions

Categories: Data Science, Adaltas Summit 2018, Learning | Tags: Database, Infrastructure, Machine Learning, MySQL, Node.js, Python

CodaLab Competition is a platform for code execution in the field of Data Science. It is a web interface on which a user can submit code or results and compare themselves to others. Let’s see how it…

Dec 17, 2018

Applying Deep Reinforcement Learning to Poker

Categories: Data Science | Tags: Algorithm, Gaming, Q-learning, Deep Learning, Machine Learning, Neural Network, Python

We will cover the subject of Deep Reinforcement Learning, more specifically the Deep Q Learning algorithm introduced by DeepMind, and then we’ll apply a version of this algorithm to the game of Poker…

Jan 9, 2019

Monitoring a production Hadoop cluster with Kubernetes

Categories: DevOps & SRE | Tags: Thrift, Shinken, Hadoop, Knox, Cluster, Docker, Elasticsearch, Grafana, Kubernetes, Node, Node.js, Prometheus, Python

Monitoring a production-grade Hadoop cluster is a real challenge, and the monitoring itself must constantly evolve. The software we use today is based on Nagios. Very efficient when it comes to the simplest…

Dec 21, 2018

Publish Spark SQL DataFrame and RDD with Spark Thrift Server

Categories: Data Engineering | Tags: Thrift, JDBC, Hadoop, Hive, Spark, SQL

The distributed and in-memory nature of the Spark engine makes it an excellent candidate to expose data to clients that expect low latency. Dashboards, notebooks, BI studios, KPI-based reports…

Mar 25, 2019

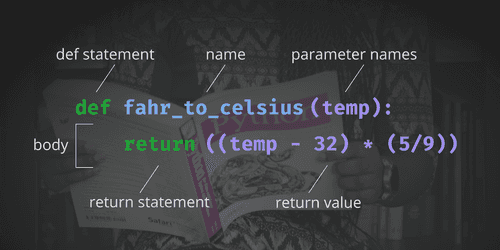

First Class Functions in Python

Categories: Hack, Learning | Tags: Programming, Python

I recently watched a talk by Dave Cheney about first class functions in Go. Python supports first class functions too, so can we use them in the same ways? Absolutely. I have been using Python for a…

Apr 15, 2019

Auto-scaling Druid with Kubernetes

Categories: Big Data, Business Intelligence, Containers Orchestration | Tags: Helm, Metrics, OLAP, Operation, Container Orchestration, EC2, Druid, Cloud, CNCF, Data Analytics, Kubernetes, Prometheus, Python

Apache Druid is an open-source analytics data store which could leverage the auto-scaling abilities of Kubernetes due to its distributed nature and its reliance on memory. I was inspired by the talk…

Jul 16, 2019

Running Apache Hive 3, new features and tips and tricks

Categories: Big Data, Business Intelligence, DataWorks Summit 2019 | Tags: JDBC, LLAP, Druid, Hadoop, Hive, Kafka, Release and features

Apache Hive 3 brings a bunch of nice new features to the data warehouse. Unfortunately, like many major FOSS releases, it comes with a few bugs and not much documentation. It has been available since…

Jul 25, 2019

TensorFlow installation on Docker

Categories: Containers Orchestration, Data Science, Learning | Tags: CPU, Linux, AI, Deep Learning, Docker, Jupyter, TensorFlow

TensorFlow is open-source software from Google for numerical computation using a graph representation: vertices (nodes) represent mathematical operations; edges represent N-dimensional data array…

Aug 5, 2019

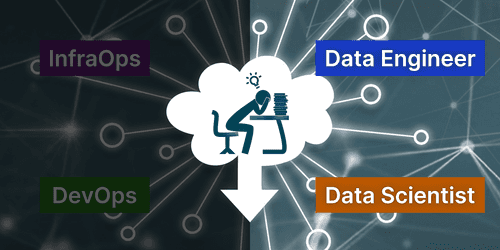

Machine Learning model deployment

Categories: Big Data, Data Engineering, Data Science, DevOps & SRE | Tags: DevOps, Operation, AI, Cloud, Machine Learning, MLOps, On-premises, Schema

“Enterprise Machine Learning requires looking at the big picture […] from a data engineering and a data platform perspective,” lectured Justin Norman during the talk on the deployment of Machine…

Sep 30, 2019

Internship Data Science & Data Engineer - ML in production and streaming data ingestion

Categories: Data Engineering, Data Science | Tags: DevOps, Flink, Hadoop, HBase, Kafka, Spark, Internship, Kubernetes, Python

Context: The exponential evolution of data has turned the industry upside down by redefining data storage, processing and data ingestion pipelines. Mastering these methods considerably facilitates…

By David WORMS

Nov 26, 2019

Spark Streaming part 2: run Spark Structured Streaming pipelines in Hadoop

Categories: Data Engineering, Learning | Tags: Spark, Apache Spark Streaming, Python, Streaming

Spark can process streaming data on a multi-node Hadoop cluster relying on HDFS for the storage and YARN for the scheduling of jobs. Thus, Spark Structured Streaming integrates well with Big Data…

May 28, 2019

Spark Streaming part 3: DevOps, tools and tests for Spark applications

Categories: Big Data, Data Engineering, DevOps & SRE | Tags: DevOps, Learning and tutorial, Spark, Apache Spark Streaming

Whenever services are unavailable, businesses experience large financial losses. Spark Streaming applications can break, like any other software application. A streaming application operates on data…

May 31, 2019

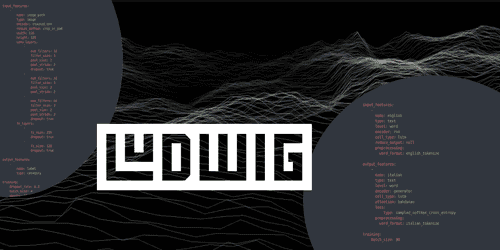

Introduction to Ludwig and how to deploy a Deep Learning model via Flask

Categories: Data Science, Tech Radar | Tags: Learning and tutorial, Deep Learning, Ludwig Deep Learning Toolbox, Machine Learning, Python

Over the past decade, Machine Learning and deep learning models have proven to be very effective in performing a wide variety of tasks such as fraud detection, product recommendation, autonomous…

Mar 2, 2020

MLflow tutorial: an open source Machine Learning (ML) platform

Categories: Data Engineering, Data Science, Learning | Tags: AWS, Azure, Databricks, Deep Learning, Deployment, Machine Learning, MLflow, MLOps, Python, Scikit-learn

Introduction and principles of MLflow: With increasingly cheap computing power and storage and, at the same time, increasing data collection in all walks of life, many companies integrated Data Science…

Mar 23, 2020

Introducing Apache Airflow on AWS

Categories: Big Data, Cloud Computing, Containers Orchestration | Tags: PySpark, Learning and tutorial, Airflow, Oozie, Spark, AWS, Docker, Python

Apache Airflow offers a potential solution to the growing challenge of managing an increasingly complex landscape of data management tools, scripts and analytics processes. It is an open-source…

May 5, 2020

Importing data to Databricks: external tables and Delta Lake

Categories: Data Engineering, Data Science, Learning | Tags: Parquet, AWS, Amazon S3, Azure Data Lake Storage (ADLS), Databricks, Delta Lake, Python

During a Machine Learning project we need to keep track of the training data we are using. This is important for audit purposes and for assessing the performance of the models, developed at a later…

May 21, 2020

Optimization of Spark applications in Hadoop YARN

Categories: Data Engineering, Learning | Tags: Tuning, Hadoop, Spark, Python

Apache Spark is an in-memory data processing tool widely used in companies to deal with Big Data issues. Running a Spark application in production requires user-defined resources. This article…

Mar 30, 2020

Experiment tracking with MLflow on Databricks Community Edition

Categories: Data Engineering, Data Science, Learning | Tags: Spark, Databricks, Deep Learning, Delta Lake, Machine Learning, MLflow, Notebook, Python, Scikit-learn

Introduction to Databricks Community Edition and MLflow: Every day the number of tools helping Data Scientists to build models faster increases. Consequently, the need to manage the results and the…

Sep 10, 2020

Faster model development with H2O AutoML and Flow

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python

Building Machine Learning (ML) models is a time-consuming process. It requires expertise in statistics, ML algorithms, and programming. On top of that, it also requires the ability to translate a…

Dec 10, 2020

TensorFlow Extended (TFX): the components and their functionalities

Categories: Big Data, Data Engineering, Data Science, Learning | Tags: Beam, Data Engineering, Pipeline, CI/CD, Data Science, Deep Learning, Deployment, Machine Learning, MLOps, Open source, Python, TensorFlow

Putting Machine Learning (ML) and Deep Learning (DL) models in production is certainly a difficult task. It has been recognized as more failure-prone and time-consuming than the modeling itself, yet…

Mar 5, 2021

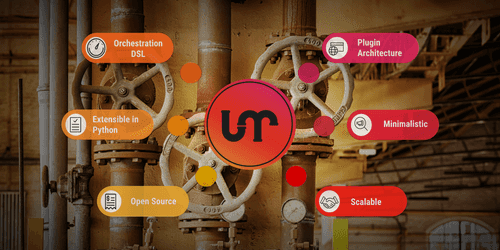

Apache Liminal: when MLOps meets GitOps

Categories: Big Data, Containers Orchestration, Data Engineering, Data Science, Tech Radar | Tags: Data Engineering, CI/CD, Data Science, Deep Learning, Deployment, Docker, GitOps, Kubernetes, Machine Learning, MLOps, Open source, Python, TensorFlow

Apache Liminal is open-source software that proposes a solution for deploying end-to-end Machine Learning pipelines. It centralizes all the steps needed to construct Machine Learning…

Mar 31, 2021

Modern Python part 2: write unit tests & enforce Git commit conventions

Categories: DevOps & SRE | Tags: Git, pandas, Python, Unit tests

Good software engineering practices always bring a lot of long-term benefits. For example, writing unit tests lets you maintain large codebases and ensures that a specific piece of your code…

By Faouzi BRAZA

Jun 24, 2021

Modern Python part 1: start a project with pyenv & poetry

Categories: DevOps & SRE | Tags: Git, Python, Release and features, Unit tests

When learning a programming language, the focus is essentially on understanding the syntax, the code style, and the underlying concepts. With time, you become sufficiently comfortable with the…

By Faouzi BRAZA

Jun 9, 2021

Modern Python part 3: run a CI pipeline & publish your package to PiPy

Categories: DevOps & SRE | Tags: CI/CD, Git, GitHub, Python, Release and features, Unit tests

To offer a well-maintained and usable Python package to the open-source community, or even inside your company, you are expected to complete a set of critical steps. First ensure that your code is…

By Faouzi BRAZA

Jun 28, 2021

H2O in practice: a Data Scientist feedback

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python

Automated machine learning (AutoML) platforms are gaining popularity and becoming a new important tool in the data scientists’ toolbox. A few months ago, I introduced H2O, an open-source platform for…

Sep 29, 2021

H2O in practice: a protocol combining AutoML with traditional modeling approaches

Categories: Data Science, Learning | Tags: Automation, Cloud, H2O, Machine Learning, MLOps, On-premises, Open source, Python, XGBoost

H2O comes with a lot of functionality. The second part of the series H2O in practice proposes a protocol to combine AutoML modeling with traditional modeling and optimization approaches. The objective…

Nov 12, 2021

Ansible variables: choosing the right location

Categories: DevOps & SRE | Tags: Infrastructure, Ansible, IaC, YAML

Defining variables for your Ansible playbooks and roles can become challenging as your project grows. When browsing the Ansible documentation, the variety of locations for Ansible variables is confusing, to…

Mar 15, 2022

Spark on Hadoop integration with Jupyter

Categories: Adaltas Summit 2021, Infrastructure, Tech Radar | Tags: Infrastructure, Spark, YARN, CDP, HDP, Jupyter, Notebook, TDP

Over several years, Jupyter Notebook has established itself as the notebook solution in the Python universe. Historically, Jupyter is the tool of choice for data scientists who mainly develop in Python…

Sep 1, 2022

Dive into tdp-lib, the SDK in charge of TDP cluster management

Categories: Big Data, Infrastructure | Tags: Programming, Ansible, Hadoop, Python, TDP

All the deployments are automated and Ansible plays a central role. With the growing complexity of the code base, a new system was needed to overcome Ansible’s limitations, which will enable us to…

Jan 24, 2023

New TDP website launched

Categories: Big Data | Tags: Programming, Ansible, Hadoop, Python, TDP

The new TDP (Trunk Data Platform) website is online. We invite you to browse its pages to discover the platform, stay informed, and cultivate contact with the TDP community. TDP is a completely open…

By David WORMS

Oct 3, 2023